More and more companies are reevaluating their presence in the cloud and in the on-premise data center. Some who pledged to move most or even all of their workloads into the cloud have found that it is not one or the other but a mixture of both. To read up on why I think both have their advantages, have a look at this blog (https://www.opensourcerers.org/2022/11/07/the-onpremise-datacenter-is-dead-or-isnt-it/). All of the advantages and disadvantages mentioned there still hold. But something has changed in the meantime: it has become easier to use the cloud almost as securely as an on-premise installation.

Confidential computing and its value for the on-premise data center

Confidential computing is a way to ensure that no external party can look at your data and business logic while they are being executed. It aims to secure Data in Use. Add to that the already established ways to secure Data at Rest (encryption of data stored in files or databases) and Data in Transit (secured connections between the places where data is stored and where it is processed), and it becomes very unlikely that an external party can access secured data running in a confidential computing environment.

In discussions with customers, 6 general use cases emerged. Have a look at this blog for an explanation of the use cases (https://www.opensourcerers.org/2024/05/20/confidential-computing-in-action/). Of those 6 use cases, the Secure Cloud Bursting scenario is especially important for this blog. This use case allows a component running in the on-premise data center to be executed dynamically in the public cloud while retaining its security requirements.

Adding enhanced Security to the public cloud

To be able to execute services in the cloud as discussed in the last blog, the company needs to be sure that the data and the business logic cannot be accessed by third parties. They need to be protected. Or better, they need to be executed in the trusted compute base (TCB) of the company. This is the environment where specific security standards are set to restrict all possible access to data and business logic. With the use of Confidential Computing the TCB can be extended to also incorporate the public cloud. Attestation verifies that a confidential environment is running in the public cloud and that it can be trusted to implement all the security standards necessary for running in the TCB.
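
The idea behind attestation can be sketched in a few lines of Python. This is only a simplified illustration and not a real attestation protocol: `Evidence`, `measure`, `verify_evidence` and `release_secret` are hypothetical names, and a real verifier would validate a hardware-signed report instead of comparing plain hashes.

```python
import hashlib
import hmac
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Evidence:
    """Hypothetical attestation evidence reported by a cloud environment."""
    measurement: str   # hash of the software stack that was launched
    tee_enabled: bool  # True if the workload runs in a hardware-protected environment


def measure(image: bytes) -> str:
    """Return a SHA-256 digest that stands in for a launch measurement."""
    return hashlib.sha256(image).hexdigest()


def verify_evidence(evidence: Evidence, expected_measurement: str) -> bool:
    """Accept the environment only if it is confidential and runs the expected stack."""
    if not evidence.tee_enabled:
        return False
    # Constant-time comparison of the reported and the expected measurement.
    return hmac.compare_digest(evidence.measurement, expected_measurement)


def release_secret(evidence: Evidence, expected_measurement: str,
                   secret: str) -> Optional[str]:
    """Hand out a secret (for example a data key) only after successful attestation."""
    if verify_evidence(evidence, expected_measurement):
        return secret
    return None
```

The design choice shown here is that secrets are only released after the check has passed, so an environment that cannot prove its state never receives the data it would need to run the workload.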

3 types of confidential computing

There are 3 types of confidential computing implementations available. Each of them suits a different set of use cases. Together they enable companies to extend all of their workloads into the public cloud. Each of the 6 use cases mentioned before can be implemented with one of the following types, starting with Confidential VMs.

Confidential VMs

Most companies use virtual machines to host legacy services. Some of those services might be migrated to a container-based platform and some might never be migrated. This means that for the foreseeable future the company needs a way to host VMs. With confidential VMs it is possible to dynamically create VMs in the public cloud and thereby expand the compute power of the on-premise infrastructure with public cloud instances protected by Confidential Computing.

Confidential Cluster

Especially in the scenario of Digital Sovereignty, a whole cluster including the applications deployed on it needs to be able to move from one cloud provider to another. Confidential computing enforces certain security standards, so it acts like a wrapper surrounding the cluster. With it and a standardized platform like OpenShift it is easier to implement such a move. Looking at the blog mentioned before, it is also an easier option in the case of systemic growth. A whole cluster can be moved to a confidential cluster in the public cloud until the new hardware is integrated into the on-premise infrastructure. Then the cluster can be moved back on-premise.

Confidential Containers

If the company needs to extend single applications into the cloud, confidential containers are the perfect choice, especially in the scenarios of Dynamic Cloud Bursting and seasonal peaks. Here the execution of an application or of a component of the application is limited by the on-premise infrastructure. The application, or even only parts of it, can then be moved into the cloud to take advantage of the flexibility and consumption-based pricing of the public cloud. This is especially interesting when the execution of these components needs hardware which is not readily available on-premise, like GPUs. When those limited resources are available on-premise again, the load can move back to the on-premise data center.
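
The placement decision behind cloud bursting can be illustrated with a small Python sketch. It assumes a very simplified model in which only free CPU cores and free GPUs are considered; `Workload`, `OnPremCapacity` and `place` are hypothetical names used for illustration and do not belong to any product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    """Hypothetical description of an application component."""
    name: str
    cpu_cores: int
    needs_gpu: bool = False


@dataclass(frozen=True)
class OnPremCapacity:
    """Hypothetical snapshot of the free on-premise resources."""
    free_cpu_cores: int
    free_gpus: int


def place(workload: Workload, capacity: OnPremCapacity) -> str:
    """Return "on-premise" if local resources suffice, else "confidential-cloud"."""
    if workload.cpu_cores > capacity.free_cpu_cores:
        return "confidential-cloud"
    if workload.needs_gpu and capacity.free_gpus < 1:
        return "confidential-cloud"
    return "on-premise"
```

Calling `place` again once on-premise resources are free returns `"on-premise"`, which corresponds to the load moving back to the data center.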

What has changed from the last blog?

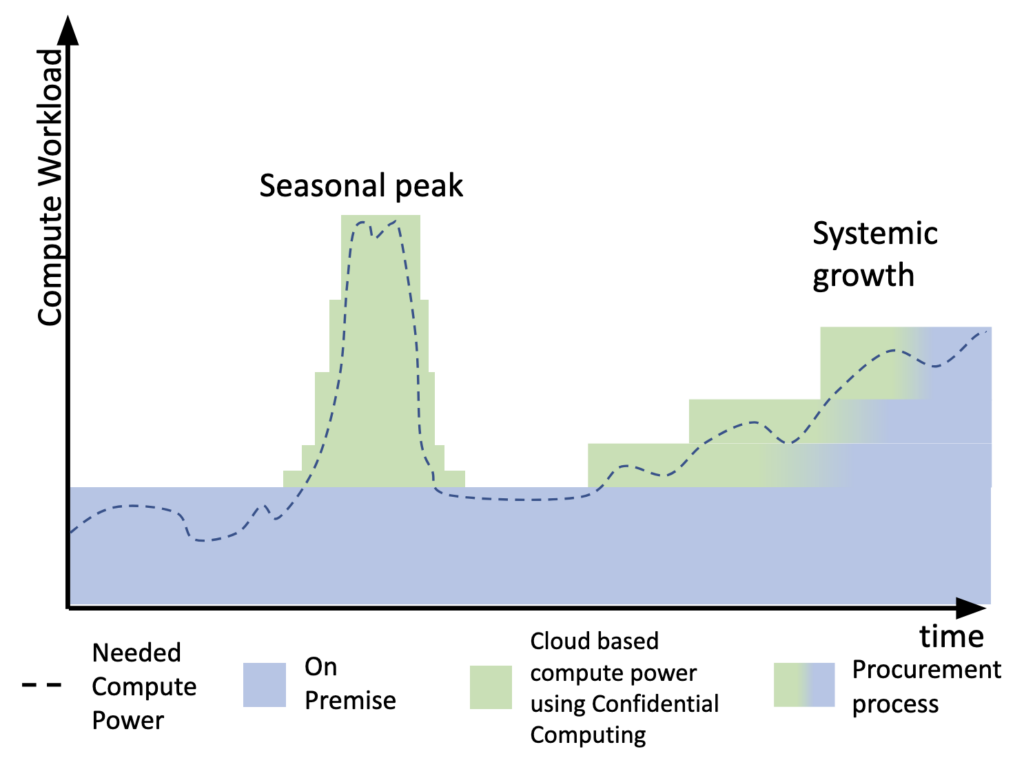

Let us go back to the scenario explained in detail in the previous blog and to its main graphic.

As in the initial version of the blog, whenever the on-premise infrastructure runs short of compute power, a confidential component can now be started to take over the execution until the on-premise environment has resources available to take back the workload. In both scenarios, seasonal peak and systemic growth, confidential computing enables a secure way to use public cloud resources.

Summary

In this blog you have seen that a combined approach of on-premise and public cloud infrastructure has gained additional advantages. With the integration of confidential computing and its different types, companies are now able to extend all of their infrastructure components into the public cloud with the same security standards as the on-premise installation.