Problem Summary

At the start of spring 2022, I was asked to assist in untangling a very escalated set of related cases with one of our customers. Their cluster had been experiencing stability issues over a period of several months. Initial analyses by support seemed to suggest resource problems, in particular insufficient memory. There were many instances of containers being terminated through the OOM-killer mechanism (OOM is Out Of Memory). However, in the case of this cluster, the OOM-killing seemed to cause cascading node failures, which could not be accounted for by initial analyses.

What is OOM killer and why should you care?

OOM-killer is a standard Linux kernel mechanism that protects the system’s stability by means of killing enough processes to give back sufficient resources to allow it to stabilize. OpenShift relies on this system to terminate containers that attempt to allocate more memory than they have been allotted. The enforcement of these limits is done by a kernel feature called cgroups. These can be used for both memory and CPU, and other resources that a process may consume during its lifetime.

Kubernetes, and by extension, OpenShift, schedule processes to nodes according to availability of resources. Each node will have X amount of total resources, let’s say 16 CPU and 32GB RAM. Of this, a certain amount is required to simply run the infrastructure components, like crio, kubelet, kubeproxy, the network operators, and so forth. This amount is known as ‘system-reserved’. Red Hat has a Knowledge Base article on how to calculate this for clusters prior to 4.8. In OpenShift 4.8, this is done by OpenShift itself. To calculate the allocatable amount, the node takes the system total, and subtracts the system-reserved amount. This value is the piece of pie that can be sliced further and given to the individual pods.

How Kubernetes schedules pods

When a pod is scheduled, the Kubernetes scheduler takes a look at the pod’s requested resources. It will check these against what is available on its Ready nodes, and try to spread the pods as evenly as possible, considering any affinity and anti-affinity rules, and other constraints. When it does the check for available resources, it does not take into account any values assigned to the pod’s limits. The policing of the limits is left to the kernel of the node that the pod is scheduled on. This is important because requests and limits cannot be used as a min/max resource indication. The limits are there to protect the integrity of the other pods, and in fact, the entire cluster itself.

Elastic and non-elastic resources

While on the surface both CPU and memory may appear as similar resources, they can be split into elastic and non-elastic resources. Under load, the amount of CPU time allocated to a process or pod can be made smaller or bigger, depending on how many other processes are competing with it. This ability to simply allocate smaller slices is referred to as elastic. Memory, however, does not benefit from this. It is a finite resource that does allow itself to be sliced into smaller chunks, like the CPU. This is called non-elastic. The same applies to storage access and storage space. The former being elastic, and the latter being non-elastic; you can squeeze a process into smaller slices of I/O access, but you can’t put more bits in a single physical byte.

Great, but what does this all mean?

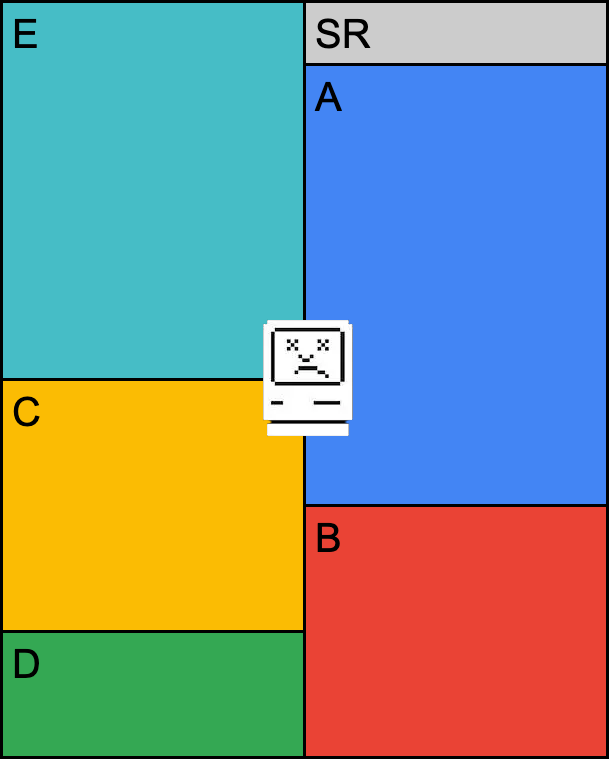

When the must-gather logs and our custom data scrapes were analysed it was found that many nodes were oversubscribed. In some cases, the pods were requesting 128MB, with a limit of 2GB, and were running at around 600MB. That is 469% more than what Kubernetes was initially told to keep in mind when scheduling this pod. Many other examples like this one existed on this cluster. One would think that this is not an issue, surely, there is more memory elsewhere? You would be right in saying that. However, that is not where the problem lies. Below is a simplified example of what might happen.

Pod A: Request: 400MB, Limit: 1GB

Pod B: Request: 200MB, Limit: 500MB

Neither Pod A, nor Pod B are at their limit, but are using more than their initial requested amounts:

Pod A: runtime usage: 600MB

Pod B: runtime usage: 400MB

Pods C and D are scheduled and started on the node

Pod C: Request: 200MB, Limit: 2GB

Pod D: Request: 200MB, Limit 1GB

Allocatable left after is 600MB. Everything’s still fine.

Pod C has increased its memory usage to 400MB, but is still within its limits.

Allocatable memory has decreased to 400MB.

Pod E needs to be scheduled.

Pod E: Request 200MB, Limit 1GB

Kubernetes scheduler determines that this is still fine, as the requested size still fits within the 400MB allocatable.

Node is left with 200MB allocatable.

None of the pods have reached their limits. Kubelet has no reason to terminate any of these pods.

Pod E has increased its memory usage, but is still within its limits. Allocatable is now 0, and it has crept into the system reserved space. System stability is now compromised.

Multiple scenarios can happen:

Kubelet still has some control, and manages to kill pods. However, the OOM-killing will resume as soon as the pods go back to their run-time size.

In the other scenario, kubelet has no control, and the kernel starts the OOM killing of processes, which could include any number of essential processes, such as kubelet, operators, crio, rendering the node NotReady. In this scenario, the control plane will attempt to reschedule the pods to another node.

The second node is stable, and has 1GB allocatable, since 1GB is already being used by pods X, Y and Z.

The control plane sees that there is plenty of space for pods A through C that were recently killed and need to be restarted.

Pod A: Request: 400MB, Limit: 1GB

Pod B: Request: 200MB, Limit: 500MB

Pod C: Request: 200MB, Limit: 2GB

That’s a total requested amount of 800MB, well within the allocatable space.

The pods are scheduled, and at the start they may be fine, but as soon as they start really running, they will all balloon to their previous steady-state size, which is well beyond their initial request.

The pods have ballooned and are encroaching on the System Reserved space. The death of the node is imminent.

Changing the pod eviction settings for kubelet would not solve this problem if the memory changes are too rapid for kubelet to respond to. kubelet polls the memory usage periodically, and decides a pod’s fate after that. However, if the change happens quickly enough, it will be the automatic OOM-killer that gets kicked in by the Operating System, in an attempt to preserve stability, not kubelet. If the limits are large enough, it won’t be the cgroup’s limit that will be hit either, it will be due the OS’s lack of memory.

The problem can also arise with CPU usage, but in this case, the scenario will play out a bit differently. If the limits for the CPU usage are out of proportion with the initial request, you can get a case where kubelet and kubeproxy can become so starved for CPU time that they are unable to communicate back and forth with the control plane.

The control plane, in that case, will mark the node as NotReady, and schedule the pods that were running on that node to other nodes. However, the old node will not know about this, and will not take down the pods, as it has received no message to do so. When the node does become responsive again, there will be duplicate loads across the cluster, which could cause data corruption, or other unforeseen issues.

Mitigation and recommended next steps

So, what can be done to stop these badly behaving pods? The cluster can be protected against misconfigured request and limit amounts by setting the maxLimitRequestRatio using quotas. This will initially cause a lot of pods to fail to be scheduled, but it will force developers to reexamine the requested resource amounts, and correct them.

During the discussions with the customer, it became apparent that the key to stopping this kind of cascading failure is developer Technical Enablement. Without awareness of the consequences of badly configured requests and subsequent changes in development methodologies, the problems were bound to reoccur. It was proposed to phase in stricter request and limit ratios through a multi-step approach:

- Educate the developers to better understand what requests and limits are, and how they are used by the scheduler. Teach them the importance of getting them right, and how to do so, by using usage data in Prometheus.

- Modify the CI/CD pipelines to analyse the pod specs and alert the developers if excessive ratios are detected. Initially at least, these warnings should not break the build, the objective is to create awareness, not hamper deployment and create resistance.

- After a set period of time, introduce generous (1:4) ratios, followed by ever decreasing values, with intervals between each step, to allow developers to adjust their specs. That period is to be determined by the customer’s application stakeholders and operations team.

- Have a policy of “request:limit ratio is 1.0 unless there is a good business reason.”

- Migrate loads that are periodic to tasks with accurate resource requests and limits, so they can be scheduled appropriately.

TL;DR

Requests and Limits should never be treated as min/max requirements for any pod. The requested amount should be a reflection of the pod’s required resources (both CPU and memory) under load. The ratio between request and limit should be as close to 1.0 as possible. Any divergence from this should have a valid business reason. The request amount reflects the pod’s normal usage, the limit is there as a safety net to protect the rest of the cluster’s load, in case it misbehaves, or a mistake was made during the profiling of the application.