I have been with Red Hat as a Solution Architect since 2019. I started working in IT in 2001, and just two years later I experienced that ONE physical server can run MANY operating systems!

At that time we deployed our very first VMware vSphere cluster in a medium-sized company in Trier, my hometown. It was the first time I realized that we could deploy several workloads on a single bare-metal server. I was thrilled!

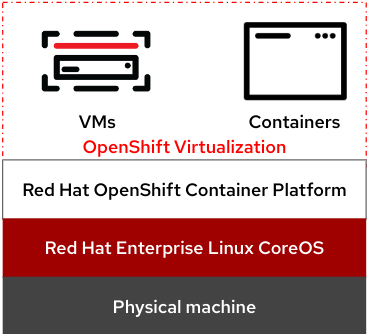

Nowadays there is OpenShift Virtualization, a platform that accommodates both containers AND virtual machines. OpenShift was originally intended as a pure container and development platform (just OpenShift, without “Virtualization”). It has since been extended with additional APIs and technical extensions within the CoreOS operating system.

We can even run virtual machines and containers side by side on a single platform: OpenShift Virtualization, based on KVM, is a game changer in the market.

VMs and containers on one platform, with a single management plane.

This is great, especially when I need to combine both harmoniously, with both using the same storage and network paradigm.

End of Life of RHV & Acquisition of VMware

As most of us already know, Red Hat has announced the end of support for its previous product, “Red Hat Virtualization” (RHV, known before that as RHEV). OpenShift Virtualization is its direct successor and takes over these workloads.

In addition, Broadcom's acquisition of VMware is causing many companies to rethink their virtualization strategy. In this article we discuss OpenShift Virtualization (abbreviated OCP+V) as an alternative to legacy virtualization platforms such as RHV/RHEV and VMware vSphere.

OpenShift Virtualization is based on the “Container-Native-Virtualization” technology

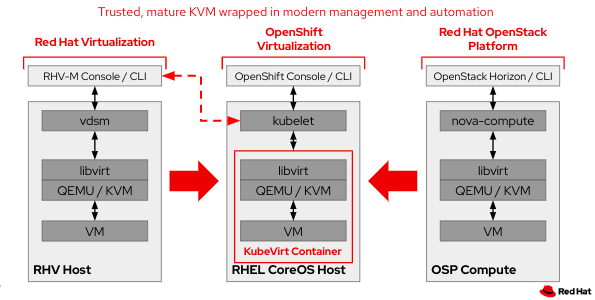

Following our core principle of “upstream first”, OCP+V is developed upstream in the KubeVirt project, now an incubating project of the Cloud Native Computing Foundation (CNCF). Red Hat started the KubeVirt project several years ago. OpenShift Virtualization first became GA (Generally Available) with the release of OpenShift 4.5 in 2020.

The virtualization integration in OCP delivers a real container-native virtualization experience. Because it is built on KVM, it is also a real Type 1 hypervisor: KVM wrapped in a modern style of management.

At the most fundamental level, it leverages the Kernel-based Virtual Machine (KVM) within CoreOS, the Linux operating system on which OpenShift is deployed.

This means a virtual machine is NOT a container. It runs inside a container and is wrapped by it, but it is still a regular Linux process, just like a VM in RHV or Red Hat OpenStack, both of which are also based on KVM. A container is essentially a process isolated by kernel namespaces and constrained by cgroups. It therefore adds no additional virtualization layer to a VM running on the Linux kernel.

So there is no performance penalty for running a VM within a container. Put simply, Red Hat provides another way to create and manage KVM-based virtual machines, side by side with containers.

What was I actually thinking 3 years ago when we introduced OpenShift Virtualization?

To be honest, I was bothered and confused, because I loved RHV (Red Hat Virtualization) and had really gotten used to it. It always stood for a very reliable and stable solution, including an incredibly comfortable web UI for operating the RHV cluster on Day 1 and Day 2.

I even thought this was the end of virtualization at Red Hat. Really, this was my firm opinion. And that is how wrong you can be… :)

In the meantime, after 3 years of development and integration, everything that defines Red Hat has come together in one platform. Now I understand how OpenShift Virtualization works and how it leverages the same trusted KVM-based technology that Red Hat has been providing and using for 17 years. I think I finally got it: I understand “how” OpenShift Virtualization can simplify our everyday life when it comes to containers and VMs.

But wait, very briefly, what is OpenShift Virtualization and why should we use it?

The virtualization integration is a core feature of Red Hat OpenShift. It is not an add-on or a separate product; it is simply OpenShift with an additional operator on top. OCP+V is included with all OpenShift entitlements, so it does not need to be subscribed to or licensed separately. All current OpenShift subscribers, including subscribers of OpenShift Kubernetes Engine, receive OpenShift Virtualization at no additional cost as part of their subscription.

As described above, OCP+V leverages the KVM hypervisor. KVM is a very mature and highly robust open-source hypervisor used by companies and cloud service providers around the world, and it has been under development for more than 17 years.

OpenShift Virtualization builds on the well-known Kubernetes paradigms, so VMs are managed by Kubernetes and the KubeVirt operator. An OpenShift Virtualization VM uses Kubernetes for scheduling, networking, storage, and many other Kubernetes tools. This also means you can connect to and manage those VMs with all the Kubernetes-native methods we know from the container world. You can also take advantage of OpenShift features and add-ons such as Pipelines, GitOps, Service Mesh, and many more.
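To make this concrete, a VM in OCP+V is just another Kubernetes object. The minimal sketch below builds a KubeVirt `VirtualMachine` manifest in Python and prints it as JSON, which `oc apply -f` accepts just like YAML. The VM name and memory size are illustrative assumptions. The container disk image `quay.io/kubevirt/cirros-container-disk-demo` is the small demo image used in upstream KubeVirt examples. Check the field names against the KubeVirt version shipped with your OpenShift release.

```python
import json


def make_vm(name: str, memory: str = "1Gi",
            image: str = "quay.io/kubevirt/cirros-container-disk-demo") -> dict:
    """Build a minimal KubeVirt VirtualMachine manifest (illustrative values)."""
    return {
        "apiVersion": "kubevirt.io/v1",
        "kind": "VirtualMachine",
        "metadata": {"name": name},
        "spec": {
            # "Always" tells KubeVirt to start the VM and keep it running.
            "runStrategy": "Always",
            "template": {
                "metadata": {"labels": {"kubevirt.io/domain": name}},
                "spec": {
                    "domain": {
                        "devices": {
                            "disks": [{"name": "rootdisk", "disk": {"bus": "virtio"}}],
                            # Attach the VM to the regular pod network using masquerade (NAT).
                            "interfaces": [{"name": "default", "masquerade": {}}],
                        },
                        "resources": {"requests": {"memory": memory}},
                    },
                    "networks": [{"name": "default", "pod": {}}],
                    "volumes": [
                        # A containerDisk is an ephemeral disk shipped as a container image.
                        {"name": "rootdisk", "containerDisk": {"image": image}},
                    ],
                },
            },
        },
    }


if __name__ == "__main__":
    # Pipe this output into `oc apply -f -` to create the VM.
    print(json.dumps(make_vm("demo-vm"), indent=2))
```

Because the VM is a plain Kubernetes resource, the same manifest can be stored in Git and synced by OpenShift GitOps, exactly like a container workload.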

OCP+V includes entitlements for unlimited virtual RHEL guests. Guest licensing for other supported operating systems like Windows will need to be purchased separately.

OCP+V is certified with Microsoft under the SVVP (Microsoft Server Virtualization Validation Program) for Windows guest support. This means we can run anything from Windows Server 2012 through Windows Server 2022 on OCP+V.

Support on AWS (ROSA) has already been announced, and we are working on adding OpenShift Virtualization support to additional managed OpenShift cloud services such as Azure Red Hat OpenShift (ARO).

Hmmmm… And what is the main strategy behind OpenShift Virtualization?

Red Hat’s strategy for the future is to take care of all of a company's traditional and legacy workloads, which largely consist of virtual machines. At the same time, many, if not most, companies and customers are already migrating at least parts of their legacy VMs to containers. That makes OpenShift, as a PaaS (Platform as a Service), a great platform for virtualization, because it uses the same control plane and the same management for VMs as for containers.

This picture shows that OCP+V is a great choice for running both VMs and containers. It is also an excellent platform for long-term migration plans from VMs to containers. Some of your workloads may be hard to migrate, and with one common platform you can simply take the time it needs!

Are we trying to replace other Virtualization Solutions in companies?

Yes, we are! Our goal is to replace the major on-premises solutions on the market, but always with our customers' use cases and mindset in mind; both have to fit. Otherwise, a migration or switch to OCP+V will probably fail.

The legacy virtualization solutions we aim to replace are:

- Red Hat Virtualization aka RHV aka RHEV aka oVirt

- VMware vSphere

- Citrix Hypervisor

- Microsoft Hyper-V

- OpenStack (at least some of its use cases)

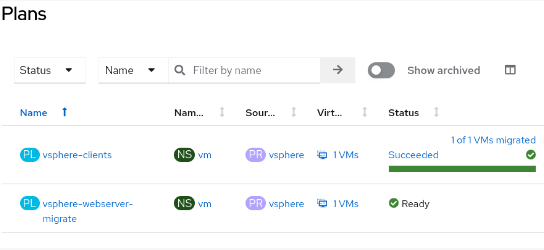

I’m sure you are now asking yourself: “And how am I supposed to migrate my fleets of VMs to OCP+V?” There is already an answer in the drawer: we use MTV. And no, MTV does not stand for “Music Television”, my favorite TV channel from the 90s, but for “Migration Toolkit for Virtualization”.

But of course, OpenShift Virtualization is NOT just a drop-in replacement. It really encourages you to move to new operational models, including the adoption of Kubernetes principles, DevOps, and automation.

Can I migrate from VMware and other virtualization solutions to OCP+V?

MTV is your best buddy when it comes to migrations. You can create migration plans and run them at a time that suits you.

With this tool we can clear up the prejudices we often hear about our ability to migrate workloads from other vendors' platforms. We can create bulk migration plans, as well as storage and network mappings from the source environment to the destination.
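For illustration, here is a minimal sketch of what such a storage mapping looks like as a Kubernetes resource. It assumes the `forklift.konveyor.io/v1beta1` `StorageMap` schema from the MTV documentation: a `map` list of source/destination pairs (with an optional `accessMode` on the destination) plus references to the source and destination providers. All names below are hypothetical placeholders for your own environment: the `openshift-mtv` namespace, the `vsphere-provider` and `host` provider names, the `datastore-12` ID, and the `ocs-storagecluster-ceph-rbd` storage class. A `NetworkMap` follows the same pattern.

```python
import json


def make_storage_map(name: str, namespace: str, source_provider: str,
                     dest_provider: str, mappings: dict) -> dict:
    """Build an MTV StorageMap manifest (schema assumed from the MTV docs).

    mappings: source datastore ID -> destination storage class.
    Every name passed in is a placeholder for your own environment.
    """
    return {
        "apiVersion": "forklift.konveyor.io/v1beta1",
        "kind": "StorageMap",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "map": [
                {
                    "source": {"id": datastore_id},
                    # Requesting RWX keeps the migrated disks eligible for live migration.
                    "destination": {"storageClass": storage_class,
                                    "accessMode": "ReadWriteMany"},
                }
                for datastore_id, storage_class in sorted(mappings.items())
            ],
            "provider": {
                "source": {"name": source_provider, "namespace": namespace},
                "destination": {"name": dest_provider, "namespace": namespace},
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical example: one vSphere datastore mapped to one OpenShift storage class.
    sm = make_storage_map("vsphere-storage-map", "openshift-mtv",
                          "vsphere-provider", "host",
                          {"datastore-12": "ocs-storagecluster-ceph-rbd"})
    print(json.dumps(sm, indent=2))
```

Generating the map from a plain dictionary makes bulk migrations easier to review, because every datastore and its destination storage class sit side by side in one place.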

OK, this looks like something worth considering. But what are the requirements? What do we need to deploy OpenShift Virtualization?

Before you install OpenShift Virtualization, make sure the following requirements are met:

- CPU requirements: 64-bit x86 AMD or Intel CPUs with hardware virtualization extensions (AMD-V or Intel VT-x)

- Storage requirements:

  - The storage has to be supported by OpenShift. As with VMware, external storage vendors such as Dell or HPE are responsible for supporting their storage solutions on OCP+V. You therefore won’t find them in Red Hat's support documents, but nearly all well-known vendors support OpenShift.

  - A CSI provisioner is strongly recommended. CSI stands for Container Storage Interface, the standard through which storage vendors and providers such as Dell, IBM, Azure, and AWS integrate their drivers and features. Examples are CSI cloning, volume resizing, and volume snapshots.

  - ReadWriteMany (RWX) PVCs are required for live migration. Almost all supported storage vendors already provide this.
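The CPU and live-migration requirements above can be sanity-checked with a small script. This is a minimal sketch, not an official tool. It assumes you give it the text of `/proc/cpuinfo` from a worker node, where the `vmx` flag indicates Intel VT-x and `svm` indicates AMD-V. It also assumes a PVC manifest as a Python dict. The helper names `has_hw_virtualization` and `is_live_migratable` are hypothetical.

```python
import os


def has_hw_virtualization(cpuinfo: str) -> bool:
    """True if a 'flags' line in /proc/cpuinfo lists vmx (Intel VT-x) or svm (AMD-V)."""
    for line in cpuinfo.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags":
            # Compare whole flag names, so that e.g. "vme" is not mistaken for "vmx".
            if set(value.split()) & {"vmx", "svm"}:
                return True
    return False


def is_live_migratable(pvc: dict) -> bool:
    """Simplified check: a VM disk PVC must be ReadWriteMany (RWX) for live migration."""
    return "ReadWriteMany" in pvc.get("spec", {}).get("accessModes", [])


if __name__ == "__main__":
    # Only meaningful when run directly on a Linux node.
    if os.path.exists("/proc/cpuinfo"):
        with open("/proc/cpuinfo") as f:
            print("hardware virtualization:", has_hw_virtualization(f.read()))
```

The flag check deliberately splits the flags into a set instead of searching for substrings, because flags such as `vme` would otherwise produce false positives.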

Customers ask, we listen: features and how they compare to VMware and RHV

Most operators and administrators tend to compare OCP+V directly with their current virtualization landscape, such as VMware vSphere, Microsoft Hyper-V, or Citrix XenServer / Citrix Private Cloud.

Many features have been implemented already.

What we always want to avoid is a feature shootout; that is not what OCP+V is about. Sometimes the use case fits, sometimes it doesn’t. But most of the time it fits. With more features coming in the upcoming months and minor versions of OCP+V, it remains exciting, so stay tuned!

Here are some of the features already implemented in OCP+V/KubeVirt.

They include live operations on powered-on VMs as well as platform-wide capabilities:

- Live Migration from one host to another

- Live changes of disks and NICs

- CPU live resizing

- HA for cluster, hosts and workloads

- Fine-grained access control

- Fine-grained network policies and micro-segmentation

- Tenant Separation

- Integration with AD/LDAP/OAuth/…

- Quotas for Storage, Network, Compute

- Complete metrics and observability stack

- Metro-DR with ACM and ODF (and some third-party solutions)

- Regional-DR with ACM and ODF

- And much more…
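Live migration, the first item in the list, is itself triggered through the Kubernetes API. As a sketch, the helper below builds a KubeVirt `VirtualMachineInstanceMigration` object for a running VM. The VM and namespace names are hypothetical. The Migrate action in the web console or `virtctl migrate <vm>` achieve the same result.

```python
import json


def make_migration(vm_name: str, namespace: str) -> dict:
    """Request a live migration of the running VM instance called vm_name."""
    return {
        "apiVersion": "kubevirt.io/v1",
        "kind": "VirtualMachineInstanceMigration",
        # generateName lets the API server append a unique suffix for each migration.
        "metadata": {"generateName": f"migrate-{vm_name}-", "namespace": namespace},
        # The target node is chosen by the Kubernetes scheduler, not specified here.
        "spec": {"vmiName": vm_name},
    }


if __name__ == "__main__":
    # Use `oc create -f -` here: `oc apply` does not accept generateName.
    print(json.dumps(make_migration("demo-vm", "vms"), indent=2))
```

Because the migration is just another object, it can be watched, audited, and access-controlled like anything else in the cluster.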

Storage Integration

- We can assign a direct LUN to a specific VM (similar to Raw Device Mapping in VMware vSphere)

- DR & Re-Attaching Storage

- LUNs from iSCSI or FC adapters on the host (including multipath)

- Backup/restore with OADP (OpenShift API for Data Protection). Many backup partners are already validated and certified with OCP+V.
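OADP is based on Velero, so a VM backup is requested with a Velero `Backup` resource. The sketch below is a minimal example. It assumes OADP is installed in its default `openshift-adp` namespace and that a backup storage location is already configured. The backup name and the namespace holding the VMs are hypothetical placeholders, and the 30-day retention is only an example value. Whether disk data ends up in CSI snapshots or a file-system backup depends on your OADP configuration.

```python
import json


def make_backup(name: str, vm_namespace: str, oadp_namespace: str = "openshift-adp") -> dict:
    """Build a Velero Backup covering one namespace of VMs (placeholder names)."""
    return {
        "apiVersion": "velero.io/v1",
        "kind": "Backup",
        "metadata": {"name": name, "namespace": oadp_namespace},
        "spec": {
            # Back up every resource in the VM namespace: VM objects, PVCs, secrets, ...
            "includedNamespaces": [vm_namespace],
            # Snapshot the VM disks where the storage backend supports it.
            "snapshotVolumes": True,
            # Keep the backup for 30 days (example retention).
            "ttl": "720h0m0s",
        },
    }


if __name__ == "__main__":
    print(json.dumps(make_backup("vms-nightly", "vms"), indent=2))
```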

Network Capabilities in OCP+V

- Multiple networks. OpenShift Virtualization uses the so-called Multus CNI plugin, which provides more than just the good old pod network. Multus is a meta-plugin that attaches additional CNI networks and so adds new network capabilities to OCP+V. This is an enormous asset for VMs: they can connect one or more additional interfaces to expose a server to the outside world or to an internal VLAN.

- Real SDN for each project. As in OpenStack or VMware vSphere, OCP+V provides logically separated networks, here per project/namespace (in VMware this is called a “Virtual Datacenter”).
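With Multus, a secondary network is declared as a `NetworkAttachmentDefinition`, and the VM then references it by name. The sketch below assumes a Linux bridge named `br1` already exists on the nodes (for example, created with the NMState operator) and uses VLAN 100. The bridge, VLAN, interface, and network names are hypothetical. The sketch uses the upstream `bridge` CNI plugin type. OpenShift Virtualization documentation examples have used `cnv-bridge` for this purpose, so check which type your version expects.

```python
import json


def make_bridge_nad(name: str, bridge: str, vlan: int, namespace: str) -> dict:
    """Multus NetworkAttachmentDefinition for a Linux bridge with a VLAN tag."""
    cni_config = {
        "cniVersion": "0.3.1",
        "name": name,
        "type": "bridge",  # may need to be "cnv-bridge", depending on the OpenShift version
        "bridge": bridge,
        "vlan": vlan,
    }
    return {
        "apiVersion": "k8s.cni.cncf.io/v1",
        "kind": "NetworkAttachmentDefinition",
        "metadata": {"name": name, "namespace": namespace},
        # Multus expects the CNI configuration as a JSON string, not as a nested object.
        "spec": {"config": json.dumps(cni_config)},
    }


def vm_secondary_interface(iface_name: str, nad_name: str) -> tuple:
    """Interface and network entries to add to a VM template's domain and networks."""
    interface = {"name": iface_name, "bridge": {}}
    network = {"name": iface_name, "multus": {"networkName": nad_name}}
    return interface, network


if __name__ == "__main__":
    print(json.dumps(make_bridge_nad("vlan-100", "br1", 100, "vms"), indent=2))
```

The interface and network entries share one name. That shared name is how KubeVirt pairs the VM's NIC with the Multus network.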

Efficiency

- Scheduling and rescheduling of virtual machines at any time

- Memory overcommitment is available, but I would not recommend it. Just believe me, it is rather evil. When you are diving, you can’t share more air than you have, or you will die. The same happens to your VMs when they try to use RAM that isn’t there at all.
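A quick back-of-the-envelope calculation shows why memory overcommitment is risky. The sketch below compares the memory promised to the VMs on one node with the node's physical RAM. The node and VM sizes are made-up example numbers. The calculation also ignores the memory that the host and the virt-launcher pods need for themselves, so the real shortfall would be even larger.

```python
def overcommit_ratio(vm_memory_gib: list, node_memory_gib: float) -> float:
    """Ratio of memory promised to VMs versus physical memory on the node."""
    return sum(vm_memory_gib) / node_memory_gib


def worst_case_shortfall_gib(vm_memory_gib: list, node_memory_gib: float) -> float:
    """RAM that is missing if every VM touches all of its memory at the same time."""
    return max(0.0, float(sum(vm_memory_gib)) - node_memory_gib)


if __name__ == "__main__":
    vms = [32, 32, 64, 64, 48]  # hypothetical VM sizes in GiB, 240 GiB in total
    node = 192                  # hypothetical node RAM in GiB
    print(f"ratio: {overcommit_ratio(vms, node):.2f}")               # ratio: 1.25
    print(f"shortfall: {worst_case_shortfall_gib(vms, node)} GiB")   # shortfall: 48.0 GiB
```

A ratio of 1.25 looks harmless, but in the worst case 48 GiB of "air" simply does not exist, and the host has to reclaim it by swapping or killing processes.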

Can we cover our legacy applications, which are still very important to us? Can we just run them on OpenShift Virtualization?

In general, legacy applications are fine. There is no blocker, since we leverage KVM, a technology on which all sorts of applications have been running for nearly 17 years.

Certification, for example for Oracle databases, is mostly tied to the guest operating system (Windows/Linux). This means we have the same support as VMware vSphere or Microsoft Hyper-V, without constraints.

Some VMs have special requirements, such as a real-time kernel. These need special attention and have to be discussed or tested.

A small selection of applications that work very well with OCP+V:

Generally, all legacy applications are fine, on Linux or Windows:

- All kinds of application servers

- All kinds of Java applications

- Windows applications

- Linux applications

Oracle DB (RAC)

- Oracle certifies for a specific OS (here, the RHEL or Windows guest), so running Oracle on OCP+V has the same support as running it on VMware vSphere.

SAP HANA

- No certification so far, but SAP HANA is certified on RHV, which uses the same technologies as OCP+V (QEMU, KVM, libvirt).

- We are working on it, so stay tuned! It already runs in many dev and test environments.

And of course, VMs that will later be split up into microservices/containers, paving the way for the infrastructure migration journey towards containers.

Summary and Conclusion

Red Hat OpenShift Virtualization is not a completely new thing: it is based on the upstream open source project KubeVirt, which has been around for several years now. It is a very mature solution when it comes to bare-metal Type 1 hypervisors. It keeps its promises and brings reliability, scalability, and self-healing capabilities. On top of that, it offers the rich resource management toolkit that VMware operators in particular expect from traditional hypervisors.

At the same time, OCP+V follows a holistically developed Kubernetes paradigm, with tools that many of you are already familiar with. Most of us will therefore quickly feel comfortable with the tools at our disposal in OCP+V.

When I first took a glance, I was really a bit confused and asked myself: “What the heck are we doing here? Why would a traditional hypervisor operator go down this whole Kubernetes rabbit hole?” But after diving deeper into the technology and its development, I realized that any traditional hypervisor operator who thinks he will do this job unchanged for the next 20 years must be out of his mind! Face the future, which does not lie in virtualization alone, and adapt to the world of containerization, automation, AND virtualization. OpenShift is the best platform to do so.

And with OCP+V, this has actually become not that complicated, since we only need to install an operator in an existing OpenShift cluster.

As shown in the picture below, in the OpenShift web console you select Operators > OperatorHub and then search for OpenShift Virtualization. It is really that easy… We will explore the installation and other hands-on, practical topics in a second article on opensourcerers.org. See you soon!
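If you prefer the CLI or GitOps over the web console, the same installation can be expressed as manifests. The sketch below follows the resources described in the OpenShift Virtualization installation documentation:

- the `openshift-cnv` namespace
- an `OperatorGroup`
- a `Subscription` to the `kubevirt-hyperconverged` package from the `redhat-operators` catalog
- a `HyperConverged` resource that deploys the virtualization components

The `stable` channel is an assumption, so verify the channel and API versions for your cluster version.

```python
import json

NS = "openshift-cnv"


def operator_manifests(channel: str = "stable") -> list:
    """Namespace, OperatorGroup and Subscription that install the operator via OLM."""
    return [
        {"apiVersion": "v1", "kind": "Namespace", "metadata": {"name": NS}},
        {
            "apiVersion": "operators.coreos.com/v1",
            "kind": "OperatorGroup",
            "metadata": {"name": "kubevirt-hyperconverged-group", "namespace": NS},
            "spec": {"targetNamespaces": [NS]},
        },
        {
            "apiVersion": "operators.coreos.com/v1alpha1",
            "kind": "Subscription",
            "metadata": {"name": "hco-operatorhub", "namespace": NS},
            "spec": {
                "source": "redhat-operators",
                "sourceNamespace": "openshift-marketplace",
                "name": "kubevirt-hyperconverged",
                "channel": channel,  # assumed channel name; check your cluster
            },
        },
    ]


def hyperconverged() -> dict:
    """HyperConverged resource; create it only after the operator is running."""
    return {
        "apiVersion": "hco.kubevirt.io/v1beta1",
        "kind": "HyperConverged",
        "metadata": {"name": "kubevirt-hyperconverged", "namespace": NS},
        "spec": {},
    }


def as_list(items: list) -> dict:
    # A v1 List lets `oc apply -f -` take several objects from one JSON document.
    return {"apiVersion": "v1", "kind": "List", "items": items}


if __name__ == "__main__":
    # Step 1: operator_manifests(). Step 2 (once the operator is ready): hyperconverged().
    print(json.dumps(as_list(operator_manifests()), indent=2))
```

The `HyperConverged` resource is kept separate on purpose. Its custom resource definition only exists after the operator has been installed, so applying it in the same step would fail.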
