We all know the limitations of building multi-architecture images on infrastructure that does not provide every target CPU architecture. We also know that emulating those architectures can help us out of that dilemma.

Unfortunately, switching CPU architectures within a highly restricted environment like an OpenShift cluster is hard to achieve, and more often than not it also breaks supportability, because those changes affect the whole cluster and not just a single build process.

During my journey through various technologies, I often have the opportunity to create technical proofs of concept, and if I am really lucky, an idea is then reworked by specialists and finally ends up on our desks, free to use.

Now, let me explain how I got images for foreign CPU architectures built within a Red Hat OpenShift cluster without modifying SELinux at the cluster level and thereby invalidating support for it. Please note that the following method is not officially supported by Red Hat.

Prerequisites and constraints

The following exercise is not for you if any of the following items conflicts with your security policies:

- A physical or virtual system with SELinux in permissive mode (1)

- Plain text communication between the OCP cluster and your build system (2)

- Podman instead of buildah, as the latter lacks the capability to delegate to other systems.

- VirtualMachinePools(v1alpha1) instead of defining and scratching a VirtualMachine each and every time.

(1) Unfortunately, no SELinux policy currently grants the domain transition between different architectures, which makes it mandatory to not enforce SELinux on the system where you are going to emulate other architectures. Still, I encourage you not to disable SELinux, but to set it to permissive mode instead.

(2) With the focus on highly automated and virtualized build systems, using hardcoded SSH keys is not impossible, but it would complicate the scenario in this context without adding much gain for in-cluster/in-namespace communication.
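A quick way to check the SELinux constraint on a candidate build system could look like the following. This is only a minimal sketch: the function name `selinux_allows_emulation` is hypothetical, while `getenforce` and `setenforce` are the standard SELinux tools.

```shell
#!/usr/bin/env bash
# Sketch: decide whether an SELinux mode permits the emulation setup.
# selinux_allows_emulation is a hypothetical helper name.

selinux_allows_emulation() {
  # Expects the mode string as printed by `getenforce`.
  case "$1" in
    Permissive|Disabled) return 0 ;;
    *) return 1 ;;
  esac
}

# Only query the host when the SELinux tooling is installed.
if command -v getenforce >/dev/null 2>&1; then
  mode="$(getenforce)"
  if selinux_allows_emulation "$mode"; then
    echo "SELinux mode '$mode' is fine for emulation"
  else
    echo "SELinux mode '$mode' blocks emulation; run: sudo setenforce 0"
  fi
fi
```

Keep in mind that `setenforce 0` only lasts until the next reboot. To make permissive mode persistent, set `SELINUX=permissive` in /etc/selinux/config.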

For everyone still willing to follow along, the requirements for the OpenShift cluster are as follows:

- Installation of the following Operators:

  - Red Hat OpenShift Virtualization

  - Red Hat OpenShift Pipelines

- We are using an explicit namespace called multi-arch-build, but you can adjust it if you want to use a different one instead.

- The image built in this example uses a private Git repository as its source, which provides a mockbin service. Make sure to adjust the Git source according to your needs.

Build system preparation

For our use-case, we are focusing on OpenShift Virtualization and in particular on ephemeral build systems that we can scale and scratch as needed.

I personally prefer ephemeral containerDisk virtual machines with everything already baked into the image, because they are more robust than applying modifications through cloud-init. You do not need to check whether the system has finished preparing, and you do not depend on your Internet download speed. For the purpose of writing a readable article, however, we are going the cloud-init way.

Let’s create the VirtualMachinePool for our build VMs.

kind: VirtualMachinePool
apiVersion: pool.kubevirt.io/v1alpha1
metadata:
  name: buildsys
  namespace: multi-arch-build
  labels:
    kubevirt-manager.io/managed: "true"
    kubevirt.io/vmpool: buildsys
spec:
  paused: false
  replicas: 4
  selector:
    matchLabels:
      vmtype: buildsys
  virtualMachineTemplate:
    metadata:
      name: buildsys-pool
      labels:
        vmtype: buildsys
        kubevirt-manager.io/managed: "true"
        kubevirt.io/vmpool: buildsys
    spec:
      running: false
      template:
        metadata:
          name: buildsys
          annotations:
            vm.kubevirt.io/flavor: small
            vm.kubevirt.io/os: rhel9
            vm.kubevirt.io/workload: server
          labels:
            vmtype: buildsys
        spec:
          architecture: amd64
          domain:
            cpu:
              cores: 1
              sockets: 1
              threads: 1
            features:
              smm:
                enabled: true
            firmware:
              bootloader:
                efi: {}
            devices:
              disks:
                - disk:
                    bus: virtio
                  name: disk0
                - disk:
                    bus: virtio
                  name: cloudinitdisk
              interfaces:
                - masquerade: {}
                  name: default
              networkInterfaceMultiqueue: true
              rng: {}
            machine:
              type: q35
            resources:
              requests:
                memory: 2Gi
          networks:
            - name: default
              pod: {}
          volumes:
            - containerDisk:
                image: registry.redhat.io/rhel9/rhel-guest-image:latest
              name: disk0
            - cloudInitNoCloud:
                userData: |
                  #cloud-config
                  user: cloud-user
                  password: changeme # or omit to remove login access at all
                  chpasswd: { expire: False }
                  rh_subscription:
                    username: ....
                    password: '...'
                  packages:
                    - podman
                    - skopeo
                  runcmd:
                    - [ /usr/bin/curl, -o, /usr/bin/qemu-arm-static, -L,
                        https://github.com/multiarch/qemu-user-static/releases/download/v7.2.0-1/qemu-arm-static ]
                    - [ /usr/bin/chmod, +x, /usr/bin/qemu-arm-static ]
                    - [ /usr/bin/chcon, -t, container_runtime_exec_t, /usr/bin/qemu-arm-static ]
                    - [ /usr/bin/podman, system, service, tcp://0.0.0.0:8888, --time=0 ]
              name: cloudinitdisk

Here is a short explanation of what we are configuring as the builder pool.

First, we specify replicas: 4, which provides four scratch-able VMs in our pool. Don’t worry, those resources are only consumed once you start a VirtualMachine instance from that pool.

You might be puzzled by the fact that we are not using any PersistentVolumeClaim, but as mentioned, we want to be scratch-able, meaning that rebooting the VM scratches all data.

Instead of a PersistentVolume, we use a containerDisk, registry.redhat.io/rhel9/rhel-guest-image:latest, as the boot and OS device.

Now for the cloud-init part: we need to hardcode some values and cannot iterate as easily as with a --run option in virt-customize, which is why we only add arm as an additional architecture. With the help of qemu-user-static, we register the binary format for the arm architecture so that the corresponding emulator is used to run arm binaries.
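If you want to confirm on the build VM that a binary format really got registered, a check along these lines might help. It is a sketch under assumptions: the helper name `binfmt_registered` is hypothetical, and it assumes that the handlers show up as `qemu-<arch>` entries below /proc/sys/fs/binfmt_misc whose first line reads `enabled`, which is how qemu-user-static usually registers them.

```shell
#!/usr/bin/env bash
# Sketch: check whether a qemu binfmt_misc handler is registered and enabled.
# binfmt_registered is a hypothetical helper; the entry naming (qemu-<arch>)
# is an assumption based on the usual qemu-user-static registration.

# Usage: binfmt_registered ARCH [DIR]
binfmt_registered() {
  local arch="$1"
  local dir="${2:-/proc/sys/fs/binfmt_misc}"
  local entry="$dir/qemu-$arch"
  # A registered handler is a readable file whose first line is "enabled".
  [ -r "$entry" ] && [ "$(head -n 1 "$entry")" = "enabled" ]
}

for arch in arm aarch64; do
  if binfmt_registered "$arch"; then
    echo "qemu-$arch: registered"
  else
    echo "qemu-$arch: not registered"
  fi
done
```

The optional second argument only exists so the check can be pointed at a different directory, for example when testing it outside the VM.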

Note: you should update the rh_subscription section with your username/password or activation-key.

All other options can be copied from an existing Template like the Red Hat OpenShift Virtualization template rhel9-server-small.

Last but not least, we expose podman on the VM through TCP. As mentioned, SSH is a valid and preferable option, but it would mean defining those machines at Pipeline startup and injecting the generated keys.

With a prepared so-called “Golden image”, we could remove all the runcmd steps as well as the package downloads, making the spin-up time much faster and more reliable.

Finally, for the builder VMs we need to define one Service per VM that our Pipelines can connect to.
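To illustrate what a client of that TCP endpoint would use, here is a small sketch that builds the connection URL. It assumes each VM is reachable through a ClusterIP Service named after the VM in the multi-arch-build namespace; the helper name `podman_service_url` is hypothetical, and the podman calls are left as comments because they need a running build VM.

```shell
#!/usr/bin/env bash
# Sketch: build the in-cluster URL of a podman API endpoint.
# podman_service_url is a hypothetical helper; namespace and port defaults
# follow the values used in this article.

# Usage: podman_service_url NAME [NAMESPACE] [PORT]
podman_service_url() {
  local name="$1"
  local namespace="${2:-multi-arch-build}"
  local port="${3:-8888}"
  printf 'tcp://%s.%s.svc:%s\n' "$name" "$namespace" "$port"
}

url="$(podman_service_url buildsys-0)"
echo "$url"

# With podman available, the endpoint could then be registered and probed:
#   podman system connection add buildsys "$url"
#   podman -c buildsys info
```

Inside the same namespace the short name (tcp://buildsys-0:8888) works as well. Remember that this traffic is plain text, which is constraint (2) from the prerequisites.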

(for num in $(seq 0 3) ; do
cat <<EOF
apiVersion: v1
kind: Service
metadata:
  labels:
    kubevirt-manager.io/managed: "true"
    kubevirt.io/vmpool: buildsys
  name: buildsys-${num}
  namespace: multi-arch-build
spec:
  ports:
    - name: podman
      port: 8888
      protocol: TCP
      targetPort: 8888
  selector:
    vm.kubevirt.io/name: buildsys-${num}
  sessionAffinity: None
  type: ClusterIP
---
EOF
done) | oc -n multi-arch-build apply -f -

Pipeline preparation

With the need to delegate our build inside the Pipeline run to a system that can change SELinux to permissive and emulate other architectures, we need to write our own Task for running the build steps.

Note: The Task is not taking care of sanitizing all possible scenarios and instead focuses on the particular use-case we describe here, i.e., if one of the stages fails, the whole build will also fail and we scratch everything (that’s something you can optimize for sure).

apiVersion: tekton.dev/v1beta1
kind: Task
metadata:
  name: podman-multi-arch
  namespace: multi-arch-build
spec:
  params:
    - default: 'tcp://buildhost.example.com:8888'
      name: buildsys
      type: string
    - default: 'linux/amd64,linux/arm64'
      name: platforms
      type: string
    - name: manifest
      type: string
    - default: ./Dockerfile
      name: dockerfile
      type: string
    - default: 'quay.example.com/podman-multi-arch:build'
      name: image
      type: string
  steps:
    - env:
        - name: BUILDSYS
          value: $(params.buildsys)
        - name: PLATFORMS
          value: $(params.platforms)
        - name: MANIFEST
          value: $(params.manifest)
        - name: DOCKERFILE
          value: $(params.dockerfile)
        - name: IMAGE
          value: $(params.image)
      image: registry.redhat.io/rhel9/podman
      name: build-and-push
      resources: {}
      script: |
        if [[ "$(workspaces.dockerconfig.bound)" == "true" ]]; then
          # if config.json exists at workspace root, we use that
          if test -f "$(workspaces.dockerconfig.path)/config.json"; then
            export DOCKER_CONFIG="$(workspaces.dockerconfig.path)"
          # else we look for .dockerconfigjson at the root
          elif test -f "$(workspaces.dockerconfig.path)/.dockerconfigjson"; then
            # make sure the target directory exists before copying
            mkdir -p "$HOME/.docker"
            cp "$(workspaces.dockerconfig.path)/.dockerconfigjson" "$HOME/.docker/config.json"
            export DOCKER_CONFIG="$HOME/.docker"
          # need to error out if neither files are present
          else
            echo "neither 'config.json' nor '.dockerconfigjson' found at workspace root"
            exit 1
          fi
        fi
        # change into the cloned sources, independent of the step's working directory
        cd "$(workspaces.source.path)"
        # register the build VM as a remote podman connection
        podman system connection add buildsys ${BUILDSYS}
        # wait until cloud-init has finished and podman answers on the VM
        for retry in $(seq 1 30) ; do
          podman -c buildsys ps && break
          sleep 10
        done
        # register the qemu binfmt handlers on the VM
        podman -c buildsys run --rm --privileged \
          docker.io/multiarch/qemu-user-static --reset -p yes
        podman -c buildsys build --authfile=${DOCKER_CONFIG}/config.json \
          --platform=${PLATFORMS} --manifest ${MANIFEST} -f ${DOCKERFILE}
        podman -c buildsys tag localhost/${MANIFEST} \
          ${IMAGE}
        podman -c buildsys manifest \
          push --authfile=${DOCKER_CONFIG}/config.json --all ${IMAGE}
  workspaces:
    - name: source
    - description: >-
        An optional workspace that allows providing a .docker/config.json file
        for Buildah to access the container registry. The file should be placed
        at the root of the Workspace with name config.json or .dockerconfigjson.
      name: dockerconfig
      optional: true

Note: The retry loop unfortunately is necessary as it takes some time to prepare the VM with cloud-init.
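One caveat of the retry loop in the Task is that it carries on with the build even if the VM never answers. A stricter variant could look like the sketch below, which fails once the attempts are exhausted. The `retry` function is a hypothetical helper and not part of the Task above.

```shell
#!/usr/bin/env bash
# Sketch: retry a command a fixed number of times and fail if it never succeeds.
# retry is a hypothetical helper, not part of the Task shown in this article.

# Usage: retry ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]
retry() {
  local attempts="$1"
  local delay="$2"
  shift 2
  local n=1
  while [ "$n" -le "$attempts" ]; do
    if "$@"; then
      return 0
    fi
    n=$((n + 1))
    # Do not sleep after the final failed attempt.
    if [ "$n" -le "$attempts" ]; then
      sleep "$delay"
    fi
  done
  echo "giving up after $attempts attempts: $*" >&2
  return 1
}

# Inside the Task script this could replace the for loop, for example:
#   retry 30 10 podman -c buildsys ps || exit 1
```

With 30 attempts and a delay of 10 seconds, this waits roughly as long as the original loop, but the step stops with an error instead of running the build against an unreachable VM.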

The rest of the steps are typical podman build steps, except that we first create a connection to our VM workload and delegate all following commands to be executed from inside the VM.

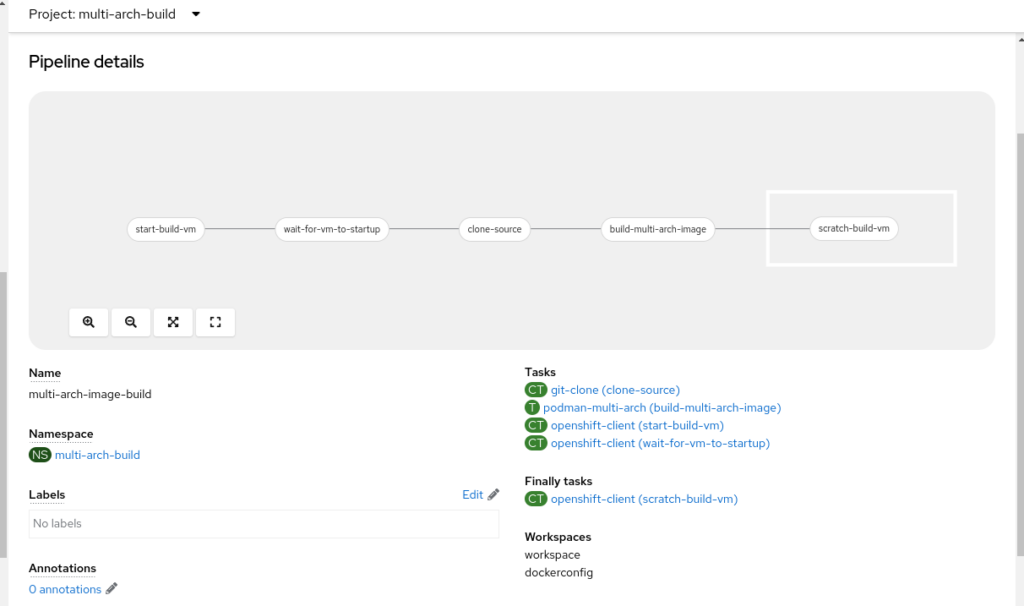

Our Pipeline definition consists of the following steps:

- Start a build VirtualMachine instance

- Clone the git repository source

- Delegate the build from a Dockerfile to the VirtualMachine instance

- Finalizer step to scratch the VirtualMachine instance

apiVersion: tekton.dev/v1beta1
kind: Pipeline
metadata:
  name: multi-arch-image-build
  namespace: multi-arch-build
spec:
  finally:
    - name: scratch-build-vm
      params:
        - name: SCRIPT
          value: >-
            oc patch vm/$(params.buildsystem) --type=merge -p
            '{"spec":{"running":false}}'
        - name: VERSION
          value: latest
      taskRef:
        kind: ClusterTask
        name: openshift-client
  params:
    - default: buildsys-0
      description: Select an instance from the Pool
      name: buildsystem
      type: string
    - default: 'quay.example.com/multi-arch-build:latest'
      description: Provide an image name
      name: image
      type: string
    - default: 'https://github.com/'
      description: Provide a git source repository
      name: source
      type: string
    - default: ./Dockerfile
      description: Select a Dockerfile for build
      name: dockerfile
      type: string
  tasks:
    - name: clone-source
      params:
        - name: url
          value: $(params.source)
        - name: revision
          value: ''
        - name: refspec
          value: ''
        - name: submodules
          value: 'true'
        - name: depth
          value: '1'
        - name: sslVerify
          value: 'true'
        - name: crtFileName
          value: ca-bundle.crt
        - name: subdirectory
          value: ''
        - name: sparseCheckoutDirectories
          value: ''
        - name: deleteExisting
          value: 'true'
        - name: httpProxy
          value: ''
        - name: httpsProxy
          value: ''
        - name: noProxy
          value: ''
        - name: verbose
          value: 'true'
        - name: gitInitImage
          value: >-
            registry.redhat.io/openshift-pipelines/pipelines-git-init-rhel8@sha256:a652e2fec41694745977d385c845244db3819acca926d85a52c77515f1f2e612
        - name: userHome
          value: /home/git
      runAfter:
        - wait-for-vm-to-startup
      taskRef:
        kind: ClusterTask
        name: git-clone
      workspaces:
        - name: output
          workspace: workspace
    - name: build-multi-arch-image
      params:
        - name: buildsys
          value: 'tcp://$(params.buildsystem):8888'
        - name: platforms
          value: 'linux/amd64,linux/arm64'
        - name: manifest
          value: mockbin
        - name: dockerfile
          value: $(params.dockerfile)
        - name: image
          value: $(params.image)
      runAfter:
        - clone-source
      taskRef:
        kind: Task
        name: podman-multi-arch
      workspaces:
        - name: source
          workspace: workspace
        - name: dockerconfig
          workspace: dockerconfig
    - name: start-build-vm
      params:
        - name: SCRIPT
          value: >-
            oc patch vm/$(params.buildsystem) --type=merge -p
            '{"spec":{"running":true}}'
        - name: VERSION
          value: latest
      taskRef:
        kind: ClusterTask
        name: openshift-client
    - name: wait-for-vm-to-startup
      params:
        - name: SCRIPT
          value: oc wait vm/$(params.buildsystem) --for=condition=Ready=True
        - name: VERSION
          value: latest
      runAfter:
        - start-build-vm
      taskRef:
        kind: ClusterTask
        name: openshift-client
  workspaces:
    - name: workspace
    - name: dockerconfig

Even though the build process inherits the Cluster pull-secret for all configured registries, we need to create another fully-populated pull-secret composed of the Red Hat registries and any additional registry we pull content from, as this will be the secret used for the podman task which delegates it to a VM that doesn’t know any of them.

oc -n openshift-config extract secret/pull-secret --to=- | \
  jq '.auths |= . + {"quay.example.com":{"auth":"...","email":"..."}}' > \
  .dockerconfigjson_new

oc -n multi-arch-build create secret docker-registry multi-arch \
  --from-file=.dockerconfigjson=.dockerconfigjson_new

oc -n multi-arch-build annotate secret/multi-arch tekton.dev/docker-0=https://quay.example.com

Note: if your Git source requires authentication, make sure to add that secret to the pipeline ServiceAccount as well.

kind: Secret
apiVersion: v1
metadata:
  name: github
  namespace: multi-arch-build
  annotations:
    tekton.dev/git-0: 'https://github.com'
data:
  password: ...
  username: ...
type: kubernetes.io/basic-auth

Our pipeline ServiceAccount should now have all necessary secrets available for use afterwards.

apiVersion: v1
imagePullSecrets:
  - name: pipeline-dockercfg-zl7kq
kind: ServiceAccount
metadata:
  name: pipeline
  namespace: multi-arch-build
[..output omitted..]
secrets:
  - name: multi-arch # used for podman auth.json
  - name: github # used for github authentication
  - name: pipeline-dockercfg-zl7kq # image registry pull auth for the pipeline

Finally, all we need to do now is to create a PipelineRun to kick off the build.

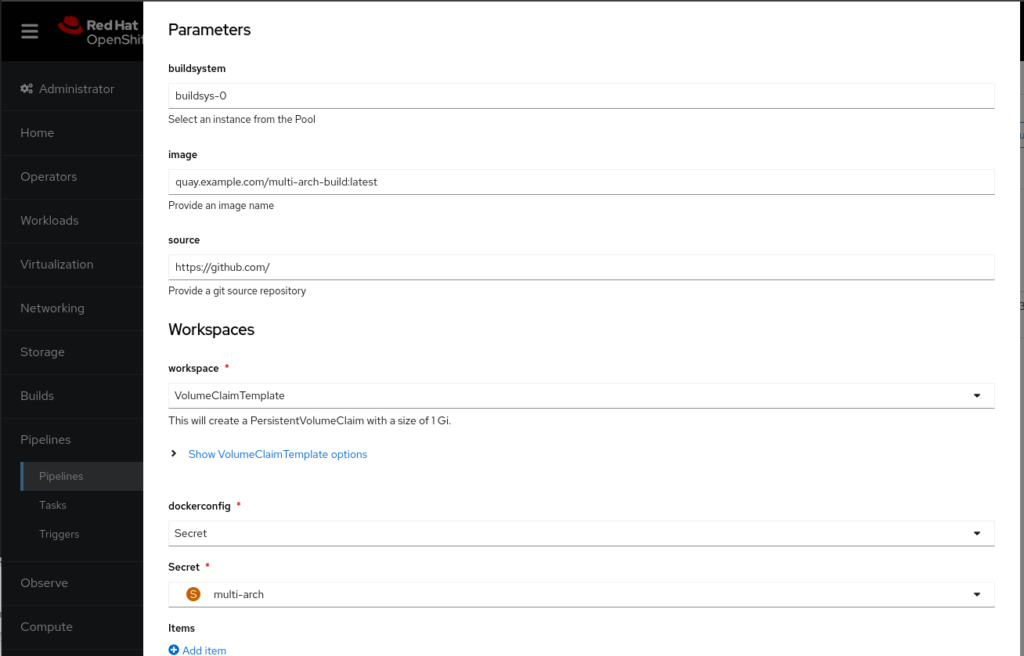

Our PipelineRun will look like this:

Note: the PersistentVolumeClaim is necessary to have the git source available at all stages. Feel free to change it to whichever storage you have at hand.

apiVersion: tekton.dev/v1beta1
kind: PipelineRun
metadata:
  annotations:
    chains.tekton.dev/signed: 'true'
  name: multi-arch-image-build
  namespace: multi-arch-build
  finalizers:
    - chains.tekton.dev/pipelinerun
  labels:
    tekton.dev/pipeline: multi-arch-image-build
spec:
  params:
    - name: buildsystem
      value: buildsys-0
    - name: image
      value: 'quay.example.com/multi-arch-build:latest'
    - name: source
      value: 'https://github.com'
  pipelineRef:
    name: multi-arch-image-build
  serviceAccountName: pipeline
  timeouts:
    pipeline: 1h0m0s
  workspaces:
    - name: workspace
      volumeClaimTemplate:
        metadata:
          creationTimestamp: null
        spec:
          accessModes:
            - ReadWriteOnce
          resources:
            requests:
              storage: 1Gi
          storageClassName: ocs-external-storagecluster-ceph-rbd
          volumeMode: Filesystem
        status: {}
    - name: dockerconfig
      secret:
        secretName: multi-arch
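Since the pool holds several build VMs, you may want to start runs against different instances without editing the manifest by hand. The following sketch renders a PipelineRun for a chosen instance. The function name `render_pipelinerun` is hypothetical, and two things are assumptions that differ from the manifest above: it uses generateName so that repeated runs do not collide, and it omits storageClassName so that the cluster's default storage class is used.

```shell
#!/usr/bin/env bash
# Sketch: render a PipelineRun manifest for a selected build VM.
# render_pipelinerun is a hypothetical helper; generateName and the missing
# storageClassName are assumptions, not taken from the article's manifest.

# Usage: render_pipelinerun BUILDSYSTEM IMAGE SOURCE
render_pipelinerun() {
  local buildsystem="$1"
  local image="$2"
  local source="$3"
  cat <<EOF
apiVersion: tekton.dev/v1beta1
kind: PipelineRun
metadata:
  generateName: multi-arch-image-build-
  namespace: multi-arch-build
spec:
  params:
    - name: buildsystem
      value: ${buildsystem}
    - name: image
      value: '${image}'
    - name: source
      value: '${source}'
  pipelineRef:
    name: multi-arch-image-build
  serviceAccountName: pipeline
  workspaces:
    - name: workspace
      volumeClaimTemplate:
        spec:
          accessModes:
            - ReadWriteOnce
          resources:
            requests:
              storage: 1Gi
    - name: dockerconfig
      secret:
        secretName: multi-arch
EOF
}

# Example (needs cluster access); generateName requires create, not apply:
#   render_pipelinerun buildsys-1 quay.example.com/multi-arch-build:latest \
#     https://github.com | oc create -f -
```

Each run should target a different pool instance, as the finalizer step stops the VM named in the buildsystem parameter.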

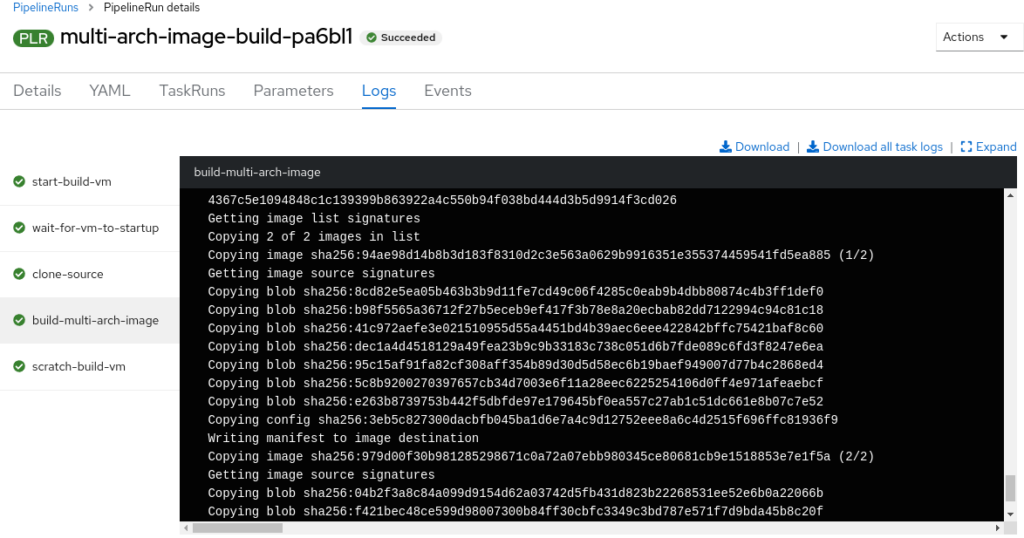

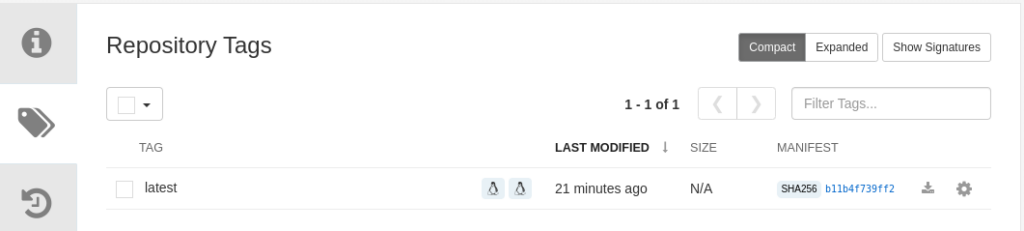

After a while you should see a successful build.

Now, let’s verify that the registry has a new image with two architectures available.

$ skopeo inspect --raw docker://quay.example.com/multi-arch-build:latest | jq -r
{
  "schemaVersion": 2,
  "mediaType": "application/vnd.oci.image.index.v1+json",
  "manifests": [
    {
      "mediaType": "application/vnd.oci.image.manifest.v1+json",
      "digest": "sha256:bd18451610cb4b41e80ec6fa07c320f74acda7992da58b0a80b9badda7ceeec9",
      "size": 1563,
      "platform": {
        "architecture": "arm64",
        "os": "linux"
      }
    },
    {
      "mediaType": "application/vnd.oci.image.manifest.v1+json",
      "digest": "sha256:e3ecc42a7ea35fb4edc2eeefef89c9ac51e4fdaf31a7e46b72526d9d7144ab11",
      "size": 1563,
      "platform": {
        "architecture": "amd64",
        "os": "linux"
      }
    }
  ]
}

If you want to ensure that the image for each architecture is working, you need privileges and SELinux in permissive mode on the test system, for the same reason as for building the image.

$ sudo setenforce 0
$ sudo podman run --rm --privileged multiarch/qemu-user-static --reset -p yes
$ podman run -ti --rm --platform=linux/arm64 --entrypoint /usr/bin/uname quay.example.com/multi-arch-build:latest -a
Linux 4e860e3e29ad 5.14.0-284.11.1.el9_2.x86_64 #1 SMP PREEMPT_DYNAMIC Tue May 9 05:49:00 EDT 2023 aarch64 aarch64 aarch64 GNU/Linux
$ podman run -ti --rm --platform=linux/amd64 --entrypoint /usr/bin/uname quay.example.com/multi-arch-build:latest -a
Linux 52a4c5c68e73 5.14.0-284.11.1.el9_2.x86_64 #1 SMP PREEMPT_DYNAMIC Tue May 9 05:49:00 EDT 2023 x86_64 x86_64 x86_64 GNU/Linux
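If you would rather script these checks than compare the output by eye, something like the following might do. It is a rough sketch with hypothetical helper names: `list_architectures` pulls the architecture values out of an image index with grep and sed (no jq required, but it is a text match, not a JSON parser), and `expected_machine` maps a platform to the machine name uname should report inside the container.

```shell
#!/usr/bin/env bash
# Sketch: scripted verification helpers for a multi-arch image.
# Both function names are hypothetical.

# Read an image index (JSON) on stdin and print one architecture per line.
list_architectures() {
  grep -o '"architecture"[[:space:]]*:[[:space:]]*"[^"]*"' \
    | sed 's/.*"\([^"]*\)"$/\1/'
}

# Map a platform string to the machine name uname should print.
expected_machine() {
  case "$1" in
    linux/amd64) echo x86_64 ;;
    linux/arm64) echo aarch64 ;;
    *) return 1 ;;
  esac
}

# Example (needs skopeo and podman plus access to the registry):
#   skopeo inspect --raw docker://quay.example.com/multi-arch-build:latest \
#     | list_architectures
#   got="$(podman run --rm --platform=linux/arm64 --entrypoint /usr/bin/uname \
#     quay.example.com/multi-arch-build:latest -m)"
#   [ "$got" = "$(expected_machine linux/arm64)" ] && echo "arm64 image works"
```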

In case you want to know more details about containerDisk and ephemeral VirtualMachines, please feel free to comment or ping me accordingly.

Happy multi-arching!