After discussing the theoretical background of why patterns are useful in common IT architectures in our first post, and the various levels at which patterns can be used in the second part, we will now dive a little deeper and look at how a pattern can be applied to a practical business problem.

Requirements

Let us assume we have an application that needs to be rolled out at different sites around the world, possibly on different infrastructure and cloud deployments. It is very important that the application is consistent wherever it is deployed, always running in the same configuration and version.

The underlying layers may consist of different infrastructures and cloud models, which will probably make things difficult to manage. Distributed infrastructures also tend to drift out of sync when some deployments are temporarily disconnected or are simply forgotten during updates.

Planning and Information gathering

So in the high-level design phase, we are looking for a pattern that provides a concept for managing applications at scale in distributed, cloud-like environments.

We already identified a portfolio architecture in our last post: the Hybrid Multi-Cloud Management Portfolio Architecture fits our requirements quite nicely. It uses OpenShift as a universal Kubernetes platform and GitOps as the management paradigm, which together provide the abstraction layers and techniques we need to fulfill our universal management requirements. The portfolio architecture also describes the interactions and roles of each of the required components.

Deployment planning, the ‘how’

But when we come to the concrete steps of planning the actual deployment, we want to reuse existing, tested deployment code as much as possible, so that we avoid writing and maintaining a lot of boilerplate code. Only a thin layer for our own application should have to be added.

To get to this level, we go one step further down to the complementary Validated Patterns at https://validatedpatterns.io.

We see that the multicloud-gitops pattern is very close to what we are looking for: it manages multiple OpenShift clusters on different infrastructures using central tooling (Red Hat Advanced Cluster Management), and it manages applications in a declarative way.

To protect secrets, it uses a central vault that stores all necessary keys and passwords.

Applications can be added as Helm charts or Kustomize templates and configured centrally. The rollout is then completely automated, and all configuration is kept in sync by ArgoCD, which is provided by the OpenShift GitOps Operator. Everything needed is available as open source code in the GitHub repository https://github.com/validatedpatterns/multicloud-gitops.

Prerequisites

If we want to deploy this pattern we need to have some prerequisites fulfilled:

- Since we want to use GitOps, we obviously need a git repository. For simplicity, we assume a repository on github.com, which makes it very easy to fork the existing repo and take it from there. In general, though, any git repository will do, including private repositories that are not connected to the internet.

- We also need at least one OpenShift cluster that acts as the hub cluster. More clusters can be added as needed; all of them are then controlled centrally from the hub cluster. Any OpenShift cluster will do; for sizing recommendations, have a look at https://validatedpatterns.io/learn/ocp-cluster-general-sizing/. Note, however, that the patterns are tested and validated on AWS and bare metal clusters.

- For local administration, a Linux-based workstation (a VM will do) that can run git and podman containers locally is required.
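To check the workstation requirement quickly, a small sketch like the following looks for git and podman on the PATH. It is our own helper, not part of the pattern tooling, and the tool list simply mirrors the prerequisites above; the pattern scripts may need more than this.

```python
import shutil

# Tools named in the prerequisites; this list is an assumption, not an
# exhaustive statement of what the pattern scripts require.
REQUIRED_TOOLS = ("git", "podman")


def missing_tools(tools=REQUIRED_TOOLS):
    """Return the tools that cannot be found on the PATH."""
    return [tool for tool in tools if shutil.which(tool) is None]


if __name__ == "__main__":
    missing = missing_tools()
    if missing:
        print("Missing tools: " + ", ".join(missing))
    else:
        print("All workstation prerequisites found.")
```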

Implementation

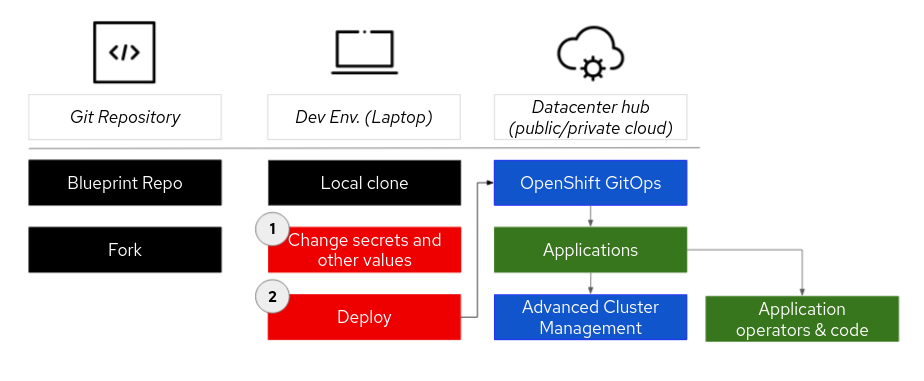

The general workflow to deploy a pattern is to fork the repo into a private repository and make local changes there.

The actual deployment can be started from the command line with a script in the local copy of the repo or from the hub cluster using the Validated Pattern Operator. This Operator can be installed from the Operator Hub in the management console of the OpenShift Hub cluster.

The general structure can be seen in this picture:

Installation via the Operator is described here:

https://validatedpatterns.io/learn/using-validated-pattern-operator/

Installation via the command line is described here:

https://validatedpatterns.io/patterns/multicloud-gitops/mcg-getting-started/

The installation takes some time and deploys a number of operators, utilities and demo applications on the hub cluster.

Exploring the deployment

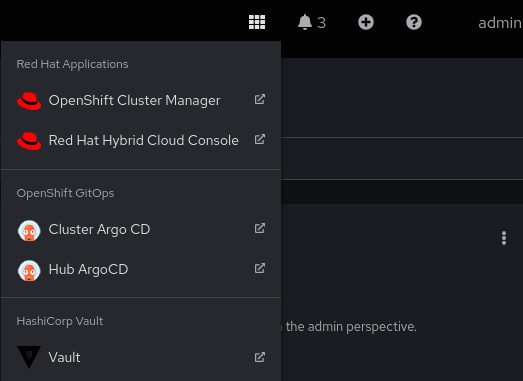

After the installation has finished, the nine-dots menu in the OpenShift console contains links to a vault and two ArgoCD instances:

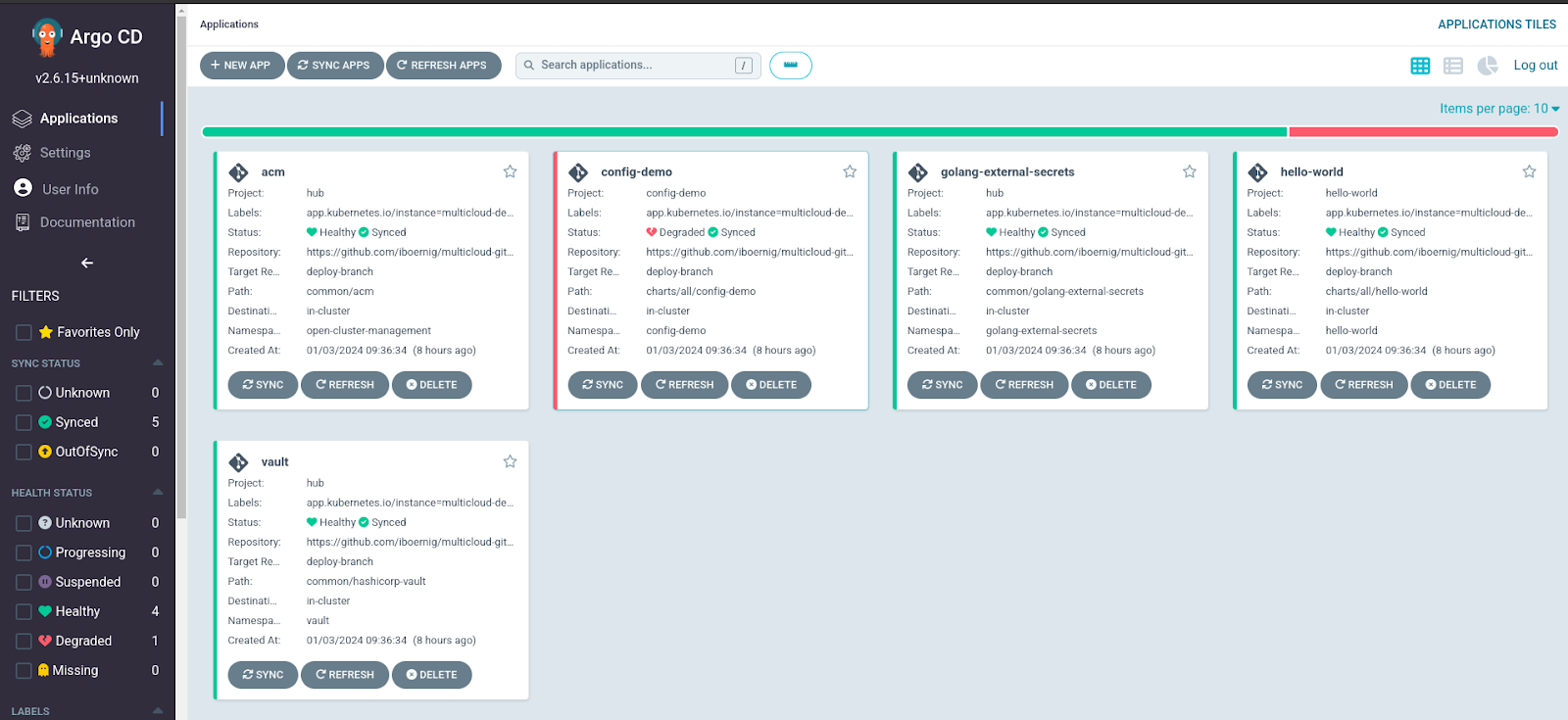

When we open the hub ArgoCD instance, it shows these five managed applications:

- Red Hat ACM (Advanced Cluster Management)
- a config demo, which is degraded (we will come to this later)
- an external secrets operator
- a hello-world demo application
- a secrets vault

A simple application

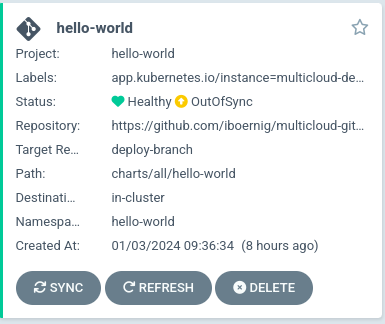

Let’s first have a look at the hello-world application:

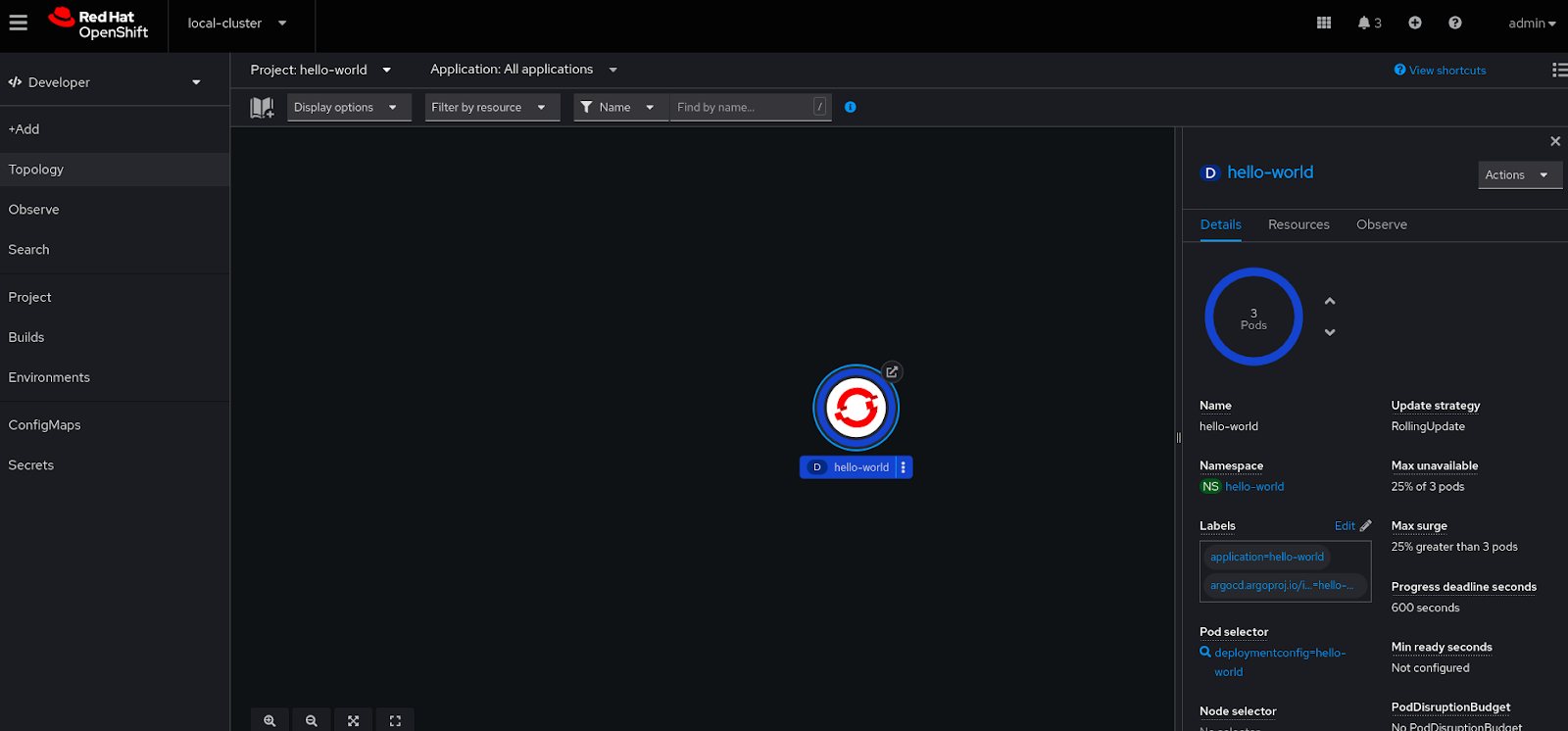

In the OpenShift “hello-world” namespace we find a simple web service:

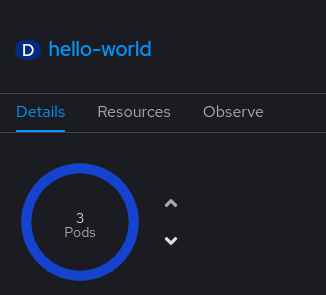

Three pods are deployed to serve the simple web application:

This looks good!
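The same check can also be done outside the console. As a sketch, assuming the pod list was exported with `oc get pods -n hello-world -o json`, the following reports which pods have the standard Kubernetes `Ready` condition set to `"True"`. The sample data in the usage block is hypothetical and heavily trimmed.

```python
import json


def ready_pods(pod_list_json):
    """Return the names of pods whose Ready condition is "True".

    pod_list_json is the text printed by `oc get pods -o json`,
    i.e. a List object with the individual pods under "items".
    """
    pod_list = json.loads(pod_list_json)
    names = []
    for pod in pod_list.get("items", []):
        conditions = pod.get("status", {}).get("conditions", [])
        if any(c.get("type") == "Ready" and c.get("status") == "True"
               for c in conditions):
            names.append(pod["metadata"]["name"])
    return names


if __name__ == "__main__":
    # Hypothetical, trimmed-down sample of the oc output for two pods.
    sample = json.dumps({
        "items": [
            {"metadata": {"name": "hello-world-abc"},
             "status": {"conditions": [{"type": "Ready", "status": "True"}]}},
            {"metadata": {"name": "hello-world-def"},
             "status": {"conditions": [{"type": "Ready", "status": "False"}]}},
        ]
    })
    print(ready_pods(sample))  # ['hello-world-abc']
```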

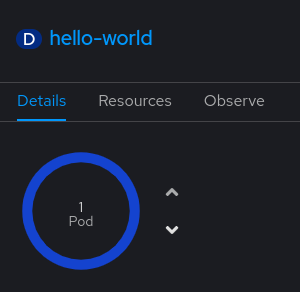

We can also see the beauty of the GitOps approach. Let us change the deployment directly on the cluster by reducing the number of pods in the OpenShift console:

ArgoCD automatically detects an out-of-sync status:

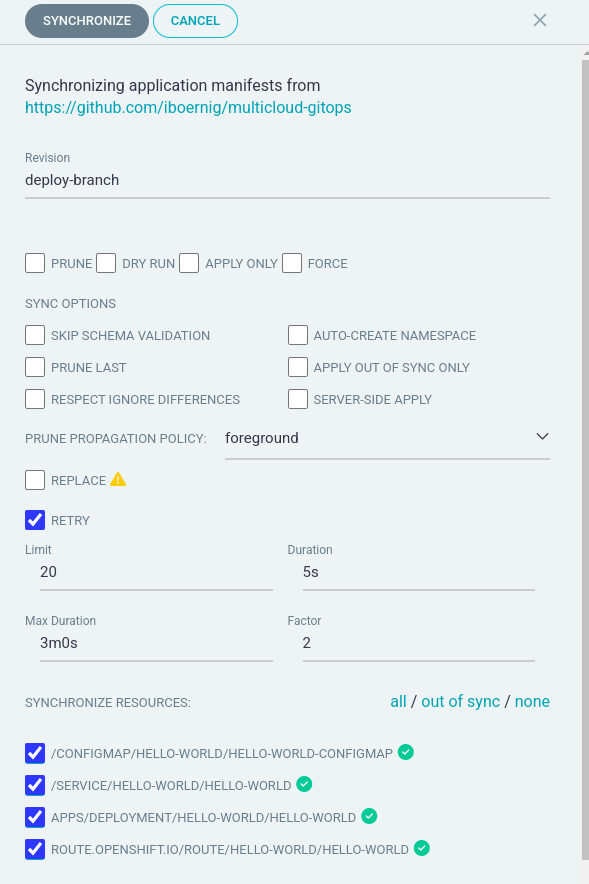
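Conceptually, this out-of-sync detection compares the desired state stored in git with the live state on the cluster. The toy model below only illustrates that idea: Argo CD's real diffing works on complete Kubernetes manifests, and the image name and replica counts are hypothetical.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class DeploymentState:
    """Toy stand-in for the parts of a Deployment that we compare."""
    image: str
    replicas: int


def drift(desired, live):
    """Map each drifted field to its (desired, live) pair."""
    return {
        f.name: (getattr(desired, f.name), getattr(live, f.name))
        for f in fields(desired)
        if getattr(desired, f.name) != getattr(live, f.name)
    }


def sync_status(desired, live):
    """Mimic Argo CD's status: Synced when nothing drifted, else OutOfSync."""
    return "OutOfSync" if drift(desired, live) else "Synced"


if __name__ == "__main__":
    desired = DeploymentState(image="hello-world:latest", replicas=3)  # from git
    live = DeploymentState(image="hello-world:latest", replicas=1)     # scaled down by hand
    print(sync_status(desired, live), drift(desired, live))  # OutOfSync {'replicas': (3, 1)}
    live = desired  # "Synchronize": re-apply the desired state from git
    print(sync_status(desired, live))  # Synced
```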

We can now resync the deployment centrally by selecting “Sync” in the ArgoCD interface:

After pressing “Synchronize” the original configuration is restored:

We could even let ArgoCD automatically do the resync, if we feel lucky!
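In plain Argo CD, automatic resynchronization is enabled per Application through `spec.syncPolicy.automated`; with `selfHeal: true`, Argo CD also reverts manual changes made on the cluster, such as the scaled-down deployment above. The sketch below builds such an Application manifest as JSON, which `oc apply -f` accepts just like YAML. The repository URL, chart path and namespaces are hypothetical placeholders. In the pattern itself, the Application objects are generated by the framework, so check the pattern documentation before changing sync behavior there.

```python
import json


def argo_application(name, repo_url, path, dest_namespace,
                     argo_namespace="openshift-gitops", self_heal=True):
    """Build an Argo CD Application manifest with automated sync enabled.

    All concrete values passed in the usage example are hypothetical.
    """
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Application",
        "metadata": {"name": name, "namespace": argo_namespace},
        "spec": {
            "project": "default",
            "source": {"repoURL": repo_url, "targetRevision": "main", "path": path},
            "destination": {"server": "https://kubernetes.default.svc",
                            "namespace": dest_namespace},
            # automated: sync automatically when the desired state in git changes.
            # prune: delete resources that were removed from git.
            # selfHeal: also revert changes made directly on the cluster.
            "syncPolicy": {"automated": {"prune": True, "selfHeal": self_heal}},
        },
    }


if __name__ == "__main__":
    app = argo_application(
        name="hello-world",
        repo_url="https://github.com/example-org/multicloud-gitops.git",  # hypothetical fork
        path="charts/all/hello-world",  # assumed chart location
        dest_namespace="hello-world",
    )
    print(json.dumps(app, indent=2))
```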

An application with secrets

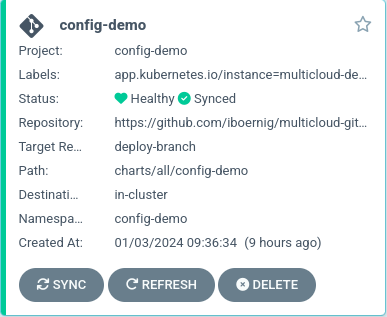

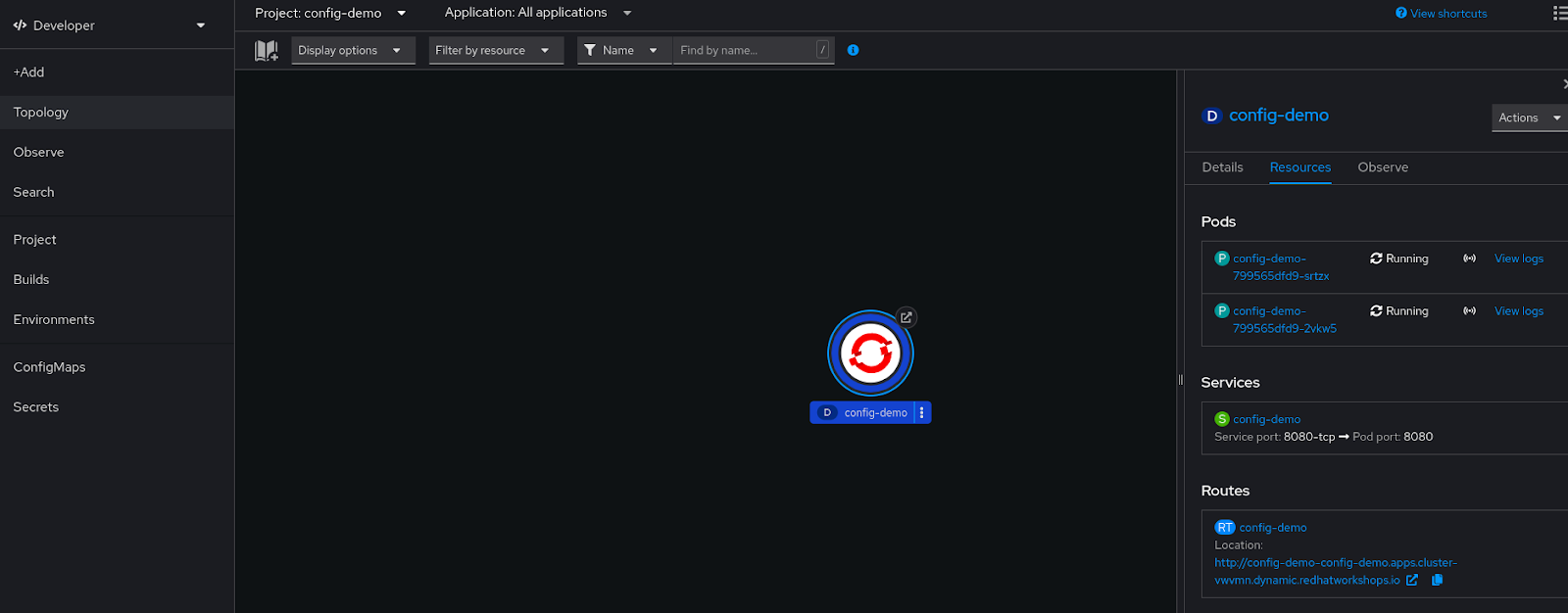

Now we should have a look at the degraded config-demo application:

It is degraded because a secret it needs has not been provided yet. From the instructions we know that secrets should never come from the git repository, but should instead be stored safely in the vault.
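One simple safeguard, which is our own suggestion rather than part of the pattern tooling, is to verify that the local secrets file lives outside the git working tree, so that it cannot be committed by accident. A minimal sketch using Python's pathlib; the paths in the usage block are hypothetical:

```python
from pathlib import Path


def is_inside_repo(secrets_file, repo_root):
    """Return True if secrets_file lies within the repository directory."""
    secrets = Path(secrets_file).expanduser().resolve()
    root = Path(repo_root).expanduser().resolve()
    return secrets == root or root in secrets.parents


if __name__ == "__main__":
    # Hypothetical locations: a forked repo and candidate secrets files.
    repo = "~/multicloud-gitops"
    for candidate in ("~/values-secrets.yaml",
                      "~/multicloud-gitops/values-secrets.yaml"):
        inside = is_inside_repo(candidate, repo)
        print(candidate, "->", "INSIDE repo, do not commit" if inside else "outside repo")
```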

To load the contents of the local values-secrets.yaml file into the vault, we again use the pattern.sh script:

```console
$ ./pattern.sh make load-secrets
[...]
TASK [vault_utils : Loads secrets file into the vault of a cluster] **********************************************
skipping: [localhost]

PLAY RECAP *******************************************************************************************************
localhost : ok=23 changed=3 unreachable=0 failed=0 skipped=5 rescued=0 ignored=0
```

This adds a secret to the vault and allows the config-demo app to proceed. After a few seconds, we see that its status has changed to healthy:

In the OpenShift console we can see that the application has finished its deployment:

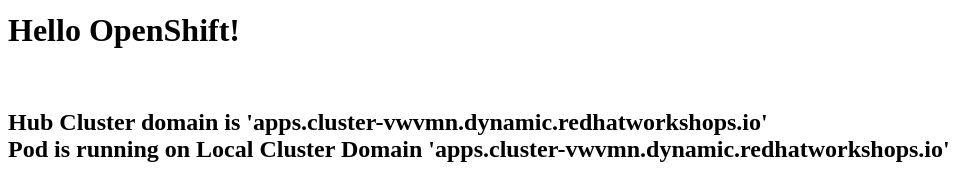

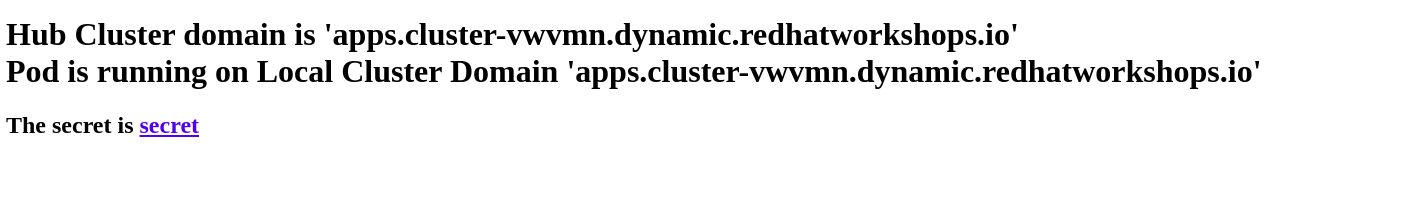

And the link shows the running application:

Even the loaded secret can be revealed by following the link.

This demo shows how secrets should be handled in a GitOps environment, where they should never be managed in git, for obvious reasons! Even if the git service is private and managed internally, it is a bad idea to store passwords there.
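What can safely live in git instead is a reference to the secret. In the pattern, the external secrets operator listed earlier copies values from the vault into regular Kubernetes Secrets. The sketch below builds a manifest for the `ExternalSecret` resource of the External Secrets Operator (API group `external-secrets.io`, shown here as `v1beta1`; newer releases also serve `v1`). The store name, vault path and property are hypothetical placeholders, not values taken from the pattern.

```python
import json


def external_secret(name, namespace, store_name, vault_key, vault_property, secret_key):
    """Build an ExternalSecret that copies one vault value into a Kubernetes Secret.

    The manifest records only *where* the value lives, never the value
    itself, so it can be committed to git.
    """
    return {
        "apiVersion": "external-secrets.io/v1beta1",
        "kind": "ExternalSecret",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "refreshInterval": "15s",
            "secretStoreRef": {"name": store_name, "kind": "ClusterSecretStore"},
            "target": {"name": name},  # Secret the operator creates in the cluster
            "data": [{
                "secretKey": secret_key,  # key inside the resulting Secret
                "remoteRef": {"key": vault_key, "property": vault_property},
            }],
        },
    }


if __name__ == "__main__":
    manifest = external_secret(
        name="config-demo-secret",                   # hypothetical
        namespace="config-demo",
        store_name="vault-backend",                  # hypothetical ClusterSecretStore name
        vault_key="secret/data/global/config-demo",  # hypothetical vault path
        vault_property="secret",
        secret_key="secret",
    )
    print(json.dumps(manifest, indent=2))
```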

Summary

We have learned that the Validated Pattern for Hybrid Multicloud Management provides a framework to deploy applications and application secrets consistently on different clouds and infrastructures using OpenShift and the GitOps paradigm. It comes with tooling and automation to manage a large number of deployments with minimal effort.

Outlook and Adoption

What does it take to adopt this for my organization and my application? We still need to integrate our own application. The simple hello-world and config-demo apps are packaged as Helm charts, and we could use Helm for our application as well, but the framework also works with Kustomize templates. The next step would be to look at the structure of the repo and see how we could add our own app. Maybe we will cover this in a future post!
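As a rough preview, and assuming the hub's values file (values-hub.yaml in the forked repo) lists namespaces under `clusterGroup.namespaces` and applications under `clusterGroup.applications`, adding our own chart could amount to one more entry. The sketch below edits such a structure as a Python dict. The key layout is an assumption to verify against your fork, and `my-app`, its chart path and the `hub` project name are hypothetical.

```python
import copy
import json


def add_application(values, name, chart_path, project="hub"):
    """Return a copy of a values-hub-style dict with one more application.

    The key layout (clusterGroup.namespaces / clusterGroup.applications)
    is an assumption; verify it against values-hub.yaml in your fork.
    """
    updated = copy.deepcopy(values)  # leave the caller's dict untouched
    group = updated.setdefault("clusterGroup", {})
    namespaces = group.setdefault("namespaces", [])
    if name not in namespaces:
        namespaces.append(name)  # the app gets its own namespace
    group.setdefault("applications", {})[name] = {
        "name": name,
        "namespace": name,
        "project": project,
        "path": chart_path,  # chart directory inside the repo
    }
    return updated


if __name__ == "__main__":
    hub = {"clusterGroup": {"namespaces": ["hello-world"], "applications": {}}}
    print(json.dumps(add_application(hub, "my-app", "charts/all/my-app"), indent=2))
```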