Today more than ever, availability and performance depend on resilience against increasingly frequent and powerful attacks on the confidentiality, availability and integrity of information and IT systems. Our systems face not only continuous automated attacks, but also highly sophisticated approaches such as Advanced Persistent Threats (APTs), ransomware, side-channel attacks and supply chain exploitation.

Evolution of threats

As more and more IT systems are automated and dynamically reconfigured, the information and infrastructure objects that need protection easily move out of sight, and adjusting security measures to continuously changing information systems becomes a challenge.

In this article, we’re going to dive a bit deeper into the security aspects of cloud computing in general, and of container platforms like Red Hat OpenShift in particular. We’ll look at what the major threats are, and how to take advantage of certain aspects of cloud computing and containers to create the necessary level of security.

The Scenery

Beyond the dynamic behavior mentioned above, modern cloud architectures are built on shared resources, which need to be protected against each other in terms of unauthorized access and manipulation. This protection in turn is the foundation for implementing tenant separation and isolation at the business level.

In the past, many manual steps were required to configure firewalls, access rights, crypto functions, monitoring, integration with SIEM systems and so on. As resources are now provisioned dynamically, the security configuration also needs to be adapted on the fly.

Consequently, general security principles such as overall hardening, Zero Trust, need-to-know and least privilege gain significance, precisely because they have a general effect that is largely independent of any individual provisioning. AI/ML-based security also plays an increasingly important role in creating baseline security, even with respect to zero-day attacks. Remediation of newly detected or not yet handled vulnerabilities is increasingly automated. Not just the IT systems, but the whole application life cycle needs to be protected against new vulnerabilities, manipulation, outages and disclosure of secrets.

Another problem that is becoming more and more visible is that it is not sufficient to secure only the border of an information system (the traditional approach known as perimeter security). Attacks can come not just from the outside, but also from inside a security zone, regardless of how well it is secured against external attacks. This applies even more to cloud platforms, where applications and services with different security demands coexist side by side on the same platform.

Physical vs. Logical

In fact, protecting a large resource pool for shared usage has some history. In the early days, when hardware was the determining cost factor, it was much more economical to use a mainframe architecture with shared resources than to give each task and each user a dedicated system (which, at the end of the day, would sit idle 90% of the time). Although client-server is probably the least economical way to use IT resources, separating users is relatively easy: as every user or server has its own hardware, you primarily need to perform isolation at the network level (called “physical” separation, simply because you can always pull the plug).

Today, we build most of the functionality for resource sharing and quotas logically rather than physically (or, put better, dynamically and software-defined instead of statically and manually). However, especially with respect to isolation, so-called Confidential Computing with hardware-supported Trusted Execution Environments or enclaves currently aims to rebuild what we already find in mainframe architectures.

Challenges and Solutions

Now common security standards and frameworks try to give guidance on security methodology and implementation. Following the standards and utilizing the frameworks greatly simplifies security and often allows certification and accreditation of whole information systems. However, even those standards and frameworks have to cope with a change towards highly dynamic development of technology on one side and new, sophisticated threats on the other.

New technologies such as cloud and containers inevitably also come with new vulnerabilities. But at the same time, new technology also helps to avoid old vulnerabilities and offers new ways to implement security at an even higher level than before. Knowing those new vulnerabilities and how to leverage the advantages of a new technology is key to success with security in the era of cloud computing.

It is obvious that security is not going to lose complexity, and the more security a cloud platform already implements and provides out of the box, the easier it is to perform the necessary tasks to make a system secure and resilient, and the more trusted the platform will be.

Fortunately, Open Source already brings a couple of properties that make information systems more secure. Don’t get me wrong: with Open Source as with Closed Source, you will be at risk if the necessary care and knowledge are missing. But with Open Source, many more eyes can be involved in a peer review process, the code stays open for audits at any time even without escrow, proven code can easily be reused, and you can even try to find and apply fixes when no official one is provided in time.

We will now try to show what the main threats are, and how Red Hat’s OpenShift Container Platform (OCP), together with Advanced Cluster Security (ACS) and Advanced Cluster Management (ACM), addresses these threats while offering as much convenience as possible. (Yes, convenience is important for security too: if countermeasures become so complex that they are not implemented, new risks arise that will not be properly addressed.) But security as a whole, and container security in particular, remains a complex topic that easily exceeds the limits of a short article, so we’ll stay at a high level.

But isn’t Kubernetes as a proven and mature technology secure on its own? Do we need additional security features or controls in order to achieve the desired level of security?

Well, OpenShift has quite a long history with security, and many of the security principles first developed with OpenShift later made their way into the Kubernetes mainstream. Just recently, OpenShift was complemented with the former StackRox, now Advanced Cluster Security, for runtime and DevOps security. Kubernetes already has a lot of features built in, and many go back to Red Hat’s engagement for a secure Kubernetes. But Kubernetes is just one (important) building block of a container platform, which requires many more components for networking, logging, monitoring, metrics, deployments and so on. Security therefore requires a holistic approach that covers all the components which finally make up the container platform. Focusing on Kubernetes alone is a bit like trying to secure a Linux system by looking only at the kernel.

Also, security is not just software, but also a matter of configuration and processes, which in turn are mostly defined as policies. A platform needs to be maintained and integrated into the overall security architecture, and this is exactly what OpenShift does. We have learned that establishing even baseline security out of the box with vanilla Kubernetes alone is not an easy task, and that it has to be maintained manually for every additional component and across every update.

Baseline Security

As mentioned above, there are several standards and frameworks for establishing security, which generally also provide best practices for implementing overall information security management as well as for securing individual, defined information systems. All of those best practices follow an analytic approach, starting with describing what shall be secured, what security level needs to be achieved, what the main threats are and which resulting risks need to be addressed and mitigated.

We don’t want to offer a primer here on how to perform all those steps; the point to take with you is that security is always a question of identifying and balancing risks. There is no 100% security (it would be unaffordable and is not possible anyway). So the proper balance needs to be found, which is a creative and responsible task. And the more security a system already provides out of the box in a convenient way, the more easily the security goals can be met.

Creating security is always bound to the business application and the nature of the data which is processed in that application. This is because before you design a security system, you need to figure out whether the business application is at risk, and how much damage will result if one or more of the security targets are affected. This always is an analytical approach. Now, in order to make things easier, security engineering tries to group all measures which are required for obtaining a general level of security, and reduce individual assessments to what is really specific. This general level of security is also called baseline security, and is especially useful with platform architectures.

With security by default and security by design, a certain level of security is already implemented within a standard OpenShift installation. Certificate management is set up, as well as data encryption for storage and communication. During the installation process, you should enable FIPS mode (so that only FIPS-validated crypto algorithms are used) and also enable disk encryption and encryption of the etcd configuration database. Creating a new project also creates a new tenant with its own security context, so that only those access points and rights are set which are required for proper operation. More on OpenShift security can be found in the OpenShift Security Guide [1].

Tenant separation via OpenShift projects (Source: Red Hat)

But there are still things to do. Official documentation is of course available on how to manage security and compliance [2]. And for your convenience, there is a ready-to-use, dedicated benchmark from the Center for Internet Security (CIS Benchmark) for OpenShift [3]. The CIS Benchmark is recognized as best practice and recommended within many security frameworks. You can of course also use the standard CIS Benchmark for Kubernetes, but the dedicated OpenShift benchmark goes further and is specially adapted to the features of OpenShift. You can run that benchmark with the Compliance Operator provided by OpenShift as often as needed, to make sure the configuration still matches the recommendations for baseline security.

To go even further, you may use the Compliance Operator to check a configuration against the policies of a specific security standard or framework such as FedRAMP, PCI-DSS or the Essential Eight (E8). This makes it much easier to document compliance with a standard, even across updates or other changes.
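To make the Compliance Operator workflow a bit more tangible, here is a minimal sketch that generates a ScanSettingBinding manifest for the CIS profiles. The profile names (`ocp4-cis`, `ocp4-cis-node`), the `default` ScanSetting and the `openshift-compliance` namespace are what we would expect from a standard Compliance Operator installation, but treat them as assumptions and verify them against your installed version. The binding name `cis-compliance` is purely illustrative.

```python
import json


def cis_scan_binding(name: str = "cis-compliance") -> dict:
    """Build a ScanSettingBinding that binds the CIS profiles to a ScanSetting.

    All object names below are assumptions about a default installation.
    """
    api_group = "compliance.openshift.io/v1alpha1"
    return {
        "apiVersion": api_group,
        "kind": "ScanSettingBinding",
        "metadata": {"name": name, "namespace": "openshift-compliance"},
        # Platform-level and node-level CIS checks are separate profiles.
        "profiles": [
            {"apiGroup": api_group, "kind": "Profile", "name": "ocp4-cis"},
            {"apiGroup": api_group, "kind": "Profile", "name": "ocp4-cis-node"},
        ],
        # The ScanSetting defines schedule and scan options.
        "settingsRef": {"apiGroup": api_group, "kind": "ScanSetting", "name": "default"},
    }


if __name__ == "__main__":
    # JSON is valid YAML, so the output can be piped into `oc apply -f -`.
    print(json.dumps(cis_scan_binding(), indent=2))
```

Other compliance profiles would be bound the same way by exchanging the entries in `profiles`.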

Day 2

Traditionally, most operational processes are performed on a planned basis. Backups are normally done in regular intervals, as well as other maintenance tasks. Updates are usually a major effort and also require appropriate planning and implementation.

It is often not easy to extend this approach to a highly dynamic environment. OpenShift uses the concept of so-called Operators to automate as many tasks as possible. An Operator can be thought of as a kind of automated operations manual. It does everything that used to be described in operating manuals, and more. Every maintenance task within OpenShift itself is done by Operators, and Operators are also the recommended way to implement security controls, since they effectively prevent individual mistakes and can be validated and audited.
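The core idea behind an Operator is a reconcile loop: compare the desired state with the actual state and correct any drift. The following toy sketch shows only that idea. It is not how any real Operator is implemented, and the function name and the state keys in the usage note are made up for illustration.

```python
def reconcile(desired: dict, actual: dict) -> list:
    """Move `actual` towards `desired` and return the actions that were taken.

    A real Operator would call the Kubernetes API instead of mutating a dict.
    """
    actions = []
    # Create or correct everything that differs from the desired state.
    for key, want in desired.items():
        have = actual.get(key)
        if have != want:
            actions.append(f"set {key}: {have!r} -> {want!r}")
            actual[key] = want
    # Remove everything that is not part of the desired state.
    for key in list(actual):
        if key not in desired:
            actions.append(f"remove {key}")
            del actual[key]
    return actions
```

Calling `reconcile({"replicas": 3}, {"replicas": 2, "debug": True})` corrects the replica count and removes the stray `debug` setting. A second call with the same arguments returns an empty list, because the state has already converged. This idempotence is what makes such automation easy to validate and audit.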

Like every software system, OpenShift needs continuous patching and regular updates. The reason is that every newly discovered vulnerability needs to be handled, and attackers are of course continuously improving their methods. Old and unpatched software releases increase the risk of new, unhandled vulnerabilities, so it is important to apply patches and updates as soon as they are available, ideally as part of a fully automated process. OpenShift uses Operators for patching and updating its own components, as well as applications and services running on top of OpenShift.

Other OpenShift Operators take care of automated compliance checks, as often as needed (the Compliance Operator), and of asserting the integrity of the platform (the File Integrity Operator). Security would not be complete without audit logs, which provide the information needed for further analysis as part of troubleshooting or forensics. OpenShift provides several selectable audit log policies. The Machine Config Operator performs all necessary OS-level configuration changes.
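File integrity checking boils down to recording a trusted baseline of file digests and later comparing the current state against it. The sketch below illustrates that principle with SHA-256 hashes. It is a simplified stand-in, not the File Integrity Operator’s actual mechanism, and the function names are our own.

```python
import hashlib
from pathlib import Path


def snapshot(root: Path) -> dict:
    """Map every file below `root` (by relative path) to its SHA-256 digest."""
    return {
        str(p.relative_to(root)): hashlib.sha256(p.read_bytes()).hexdigest()
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def diff(baseline: dict, current: dict) -> dict:
    """Report files that were added, removed or changed since the baseline."""
    return {
        "added": sorted(set(current) - set(baseline)),
        "removed": sorted(set(baseline) - set(current)),
        "changed": sorted(
            k for k in baseline.keys() & current.keys() if baseline[k] != current[k]
        ),
    }
```

In practice the baseline itself must be stored where an attacker cannot tamper with it, otherwise the comparison proves nothing.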

Baseline security often requires air-gapped environments or environments disconnected from the Internet. This is especially true for production environments. OpenShift offers full support for installation and maintenance of an air-gapped and disconnected platform. All updates can be downloaded from Red Hat from a separate domain and applied locally.

OpenShift and Virtualization

There is often the question whether the security aspects of containers are the same as those of classic virtualization. The proper answer is that yes, there are lots of similarities, but there is much more to containers, simply because containers are not just a technology, but a new paradigm for how to develop, deploy and maintain software. The main difference is probably that (hardware) virtualization mimics a logical system for every guest, with shared processor, memory and I/O, but completely separated software. Containers, on the other hand, share not only processor, memory and I/O, but also the operating system’s kernel, so that any change in the kernel immediately impacts all containers running on that kernel.

However, since containers provide a much more lightweight mechanism for workloads than virtual machines, they can be used for services with rather short lifetimes, which in turn limits the opportunity to exploit a corrupted workload over a longer period of time. Once running, container workloads are not altered, but rather replaced and restarted with every patch or update. So containers work in a way comparable to the workstations in internet cafés, which do a clean reboot every night so that it doesn’t matter that much when one of them gets corrupted.

So in terms of separating execution environments, traditional hypervisors have some advantages over containers. Quite early, the idea came up to combine the lean footprint of containers with the better isolation of hypervisors. A well-known example is Kata Containers, where every execution of a container also starts a new virtual machine into which the container is deployed. Some argue that this contradicts the advantages of containers in terms of footprint and flexibility. Still, in certain situations it seems reasonable to use hypervisors as a “second line of defense” to obtain higher security levels where required, and to run containers the “normal” way where normal security levels suffice, without losing the consistent way of deploying and managing workloads in containers.

In fact, the current OpenShift offers three deployment choices side by side. The first is to deploy into traditional virtual machines running inside containers (called OpenShift Virtualization and based on KubeVirt technology). The second is to deploy directly into Linux containers (the classic OpenShift approach). The third is to deploy into containers running inside traditional virtual machines (called Sandboxed Containers and based on Kata Containers technology). All three methods are managed by OpenShift homogeneously in the same project- and workload-centric way, using the same networking and storage functions. As a consequence, you can choose the deployment method that best fits your security requirements.
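Because all deployment methods use the same workload model, switching a pod from a normal container to a sandboxed one can be a one-line difference in its manifest. The sketch below assumes that Sandboxed Containers is installed and exposes a RuntimeClass named `kata`. That name, the helper function and the image reference are assumptions made for illustration.

```python
def pod_manifest(name: str, image: str, sandboxed: bool = False) -> dict:
    """Build a minimal Pod manifest, optionally requesting a sandboxed runtime."""
    spec = {"containers": [{"name": name, "image": image}]}
    if sandboxed:
        # runtimeClassName is a standard Pod field; "kata" is the assumed
        # name of the RuntimeClass provided by Sandboxed Containers.
        spec["runtimeClassName"] = "kata"
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": spec,
    }
```

Everything else, such as networking, storage and project membership, stays the same, so the isolation level can be chosen per workload.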

More on Defense Lines

Another second line of defense in OpenShift is Mandatory Access Control (MAC), with SELinux as the standard implementation. The idea behind SELinux is to act as a kind of firewall in front of the kernel, including its system calls. Every kernel resource is labeled, and access can be switched on or off for containers sharing that kernel.

Compromised container attacking other containers, control plane and host system

Same mitigated with mandatory access control
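The labeling idea can be sketched with a toy model of SELinux Multi-Category Security (MCS) labels, which are one part of how containers on the same kernel are kept apart. The check below only compares category sets. Real SELinux decisions also involve type enforcement and a loaded policy, and category ranges such as `c0.c1023` are not handled here. The function names and the example contexts in the usage note are illustrative.

```python
def categories(context: str) -> frozenset:
    """Extract the MCS categories from a context like 'user:role:type:s0:c1,c2'."""
    parts = context.split(":", 4)  # user, role, type, sensitivity, categories
    if len(parts) < 5:
        return frozenset()  # no categories assigned
    return frozenset(parts[4].split(","))


def mcs_allows(process_ctx: str, object_ctx: str) -> bool:
    """Toy dominance check: the process must hold every category of the object."""
    return categories(object_ctx) <= categories(process_ctx)
```

A process labeled `system_u:system_r:container_t:s0:c12,c345` passes the check for a file labeled `system_u:object_r:container_file_t:s0:c12,c345`, but not for one labeled with `s0:c7,c900`. So even a compromised container that escapes its namespace would still be stopped at the label check in this model.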

A third line of defense is the underlying operating system, the runtime environment of the container node. OpenShift uses either Red Hat Enterprise Linux (RHEL) or Red Hat CoreOS, a stripped-down and specially hardened RHEL variant with a minimized attack surface, optimized for containers. RHEL is one of the favorite operating systems for applications where security matters because of its elaborate security features and ecosystem.

With OpenShift there is also another optional line of defense: running OpenShift on top of the IBM Z / LinuxONE architecture with its sophisticated EAL5+ certified mainframe security technology.

Sometimes it is relevant where exactly a workload is running, meaning on which node, in which rack and possibly in which fire zone or data center. The idea is that certain security properties of a node, rack, fire zone (room protection) or data center (geo-redundancy) can be leveraged automatically.

OpenShift handles the runtime environment for every workload according to the policies of the user. So if a security concept requires workload separation, OpenShift already provides everything you need. The smallest item is the pod. Pods are organized in namespaces or projects. Projects again are located in clusters, and clusters are running on nodes. With OpenShift almost any assignment of pods, projects, clusters and nodes can be defined, monitored and enforced.
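Placement rules of this kind are typically expressed through node labels. The following sketch mimics the semantics of a Kubernetes `nodeSelector`: a node is eligible only if it carries every required label with the required value. `topology.kubernetes.io/zone` is a well-known Kubernetes label, while `example.com/fire-zone` in the usage note is a hypothetical custom label.

```python
def eligible_nodes(nodes: dict, required: dict) -> list:
    """Return the sorted names of nodes whose labels include all required pairs.

    `nodes` maps a node name to its label dictionary.
    """
    return sorted(
        name
        for name, labels in nodes.items()
        if all(labels.get(key) == value for key, value in required.items())
    )
```

For example, requiring `{"topology.kubernetes.io/zone": "dc1", "example.com/fire-zone": "fz1"}` restricts a workload to nodes in data center `dc1` that also sit in fire zone `fz1`. The scheduler then enforces this for every restart and rescheduling.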

In fact, one of the major advantages of OpenShift over plain Kubernetes is that OpenShift implements multi-tenancy by means of projects. Each project has its own namespace, and OpenShift does all that is needed to isolate the tenant effectively from other tenants through the full stack, including RBAC and micro-segmentation of the software-defined network. When you need to make changes, you just apply them to projects, and again OpenShift takes over the remaining tasks.
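As an illustration of micro-segmentation at the project level, the sketch below builds a standard Kubernetes NetworkPolicy that only admits ingress traffic from pods in the same namespace. Whether a policy like this is created automatically depends on how projects are templated in your cluster, so treat it as an example of the mechanism rather than a description of OpenShift’s defaults. The policy name is illustrative.

```python
def same_namespace_only(namespace: str) -> dict:
    """Build a NetworkPolicy that allows ingress only from the same namespace."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "allow-same-namespace", "namespace": namespace},
        "spec": {
            # An empty podSelector applies the policy to every pod in the namespace.
            "podSelector": {},
            "policyTypes": ["Ingress"],
            # A podSelector without a namespaceSelector matches only pods
            # in the policy's own namespace.
            "ingress": [{"from": [{"podSelector": {}}]}],
        },
    }
```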

Now consider that you have thousands of workloads, running on hundreds or even thousands of nodes and several clusters. How do you still monitor what’s going on, and whether a situation needs action or not? Imagine that a new vulnerability has been identified in a specific version of some component (e.g. Log4j). How is that managed in OpenShift?

The first thing is to check whether OCP itself is affected. Security, bug fix and enhancement updates for OCP are released as asynchronous errata through the Red Hat Network, as they are handled separately from the standard planned update life cycle. All OCP errata are available on the Red Hat Customer Portal, and you can subscribe to them for immediate notification.

At the latest upon notification, the images in the registry, especially those currently in use, should be scanned to determine whether they contain the Log4j vulnerability and need to be patched. This can be done easily with OpenShift’s Container Security Operator (CSO) via Quay’s Clair scanner, or via Advanced Cluster Security for Kubernetes (ACS). You can then inspect the vulnerabilities and decide how to deal with them. Normally you will update the affected software component and restart the pods with the new image, but OpenShift can also immediately stop all affected containers upon request.

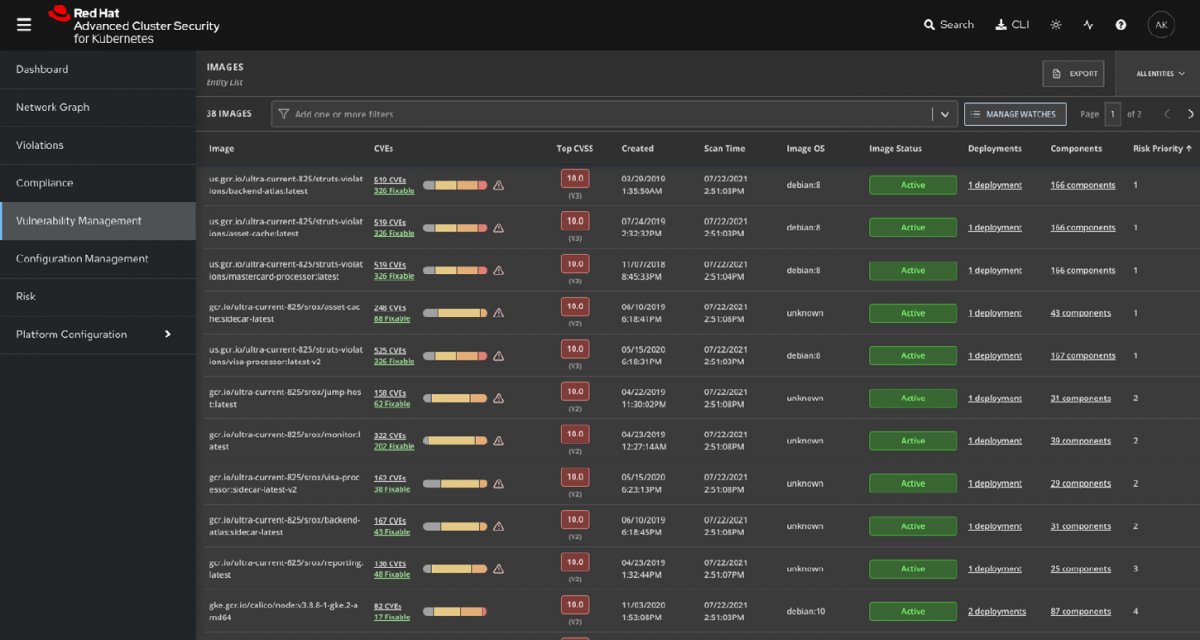

With ACS, you have even more options. You can, for instance, scan across all of your clusters, directly check for new image releases in which the vulnerability has been fixed, and replace the affected images. ACS also scans applications, infrastructure and nodes on demand, creates vulnerability reports, performs a risk assessment of all vulnerabilities for prioritization, and much more. And with Advanced Cluster Management (ACM) you can even specify appropriate remediation procedures to be applied across the whole cluster landscape if certain policies are violated.
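Conceptually, the triage step is a query over an image inventory: which images contain the affected component in a vulnerable version, and which of those are actually in use? The toy sketch below shows that logic. It is not how Clair or ACS work internally. The `Image` type, the image names and the fixed-in version in the usage note are illustrative assumptions, and only purely numeric dotted versions are handled.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Image:
    name: str
    in_use: bool
    packages: dict  # package name -> version string


def parse_version(version: str) -> tuple:
    """Turn '2.14.1' into (2, 14, 1); only numeric dotted versions are supported."""
    return tuple(int(part) for part in version.split("."))


def affected(images: list, package: str, fixed_in: str) -> list:
    """Return images that ship `package` older than `fixed_in`, in-use images first."""
    fixed = parse_version(fixed_in)
    hits = [
        image
        for image in images
        if package in image.packages
        and parse_version(image.packages[package]) < fixed
    ]
    # False sorts before True, so images that are in use come first.
    return sorted(hits, key=lambda image: (not image.in_use, image.name))
```

Calling `affected(images, "log4j-core", "2.17.1")` would list every image that still ships an older `log4j-core`, with running workloads at the top of the list. The correct fixed-in version must always be taken from the vendor advisory.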

Content

Even if the platform is properly secured, there is still the question whether we want to allow every kind of workload to run on it. From the platform perspective, it might seem not to matter whether a workload is validated and trusted or not, since the platform is robust enough to keep every workload inside its borders. In fact, there are several reasons why it is relevant what kind of workload is going to be deployed.

One reason is that the user of course expects a working application without vulnerabilities that affect the business security objectives, such as availability, integrity and confidentiality of information. A platform with all of its applications and services, and especially a PaaS, appears to the user as a single pane of glass. The user cannot distinguish between the container platform and the workloads running on it, and will either trust the whole platform or not. That, by the way, is the reason why security concepts are almost always developed from the application perspective, drilling down through the stack to find threats and appropriate countermeasures. Another reason why workloads matter is that a “rogue” container may still harm the whole system if it finds a way through the security architecture, for example via a yet unknown vulnerability.

Consequently, it should be controlled what kind of workload is running on the platform. Appropriate policies can check whether a container shall be granted root privileges, whether workloads that still contain vulnerabilities may run, whether workloads can acquire more resources than they originally claimed, whether container images meet a certain patch level or need to be signed by the developer or validated by the provider, and so on. Finally, it should be clearly defined what happens if any of the policies is violated.
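A small sketch can show how such content policies fit together: each policy is a named check on a workload, and a separate decision step defines what happens on violation. The `Workload` fields, the policy names and the three possible decisions are hypothetical and chosen only to mirror the checks listed above. They do not reflect the API of OpenShift or ACS.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    name: str
    runs_as_root: bool
    image_signed: bool
    known_critical_vulns: int
    has_resource_limits: bool


# Each policy maps a human-readable rule to a check that must return True.
POLICIES = {
    "must not run as root": lambda w: not w.runs_as_root,
    "image must be signed": lambda w: w.image_signed,
    "no known critical vulnerabilities": lambda w: w.known_critical_vulns == 0,
    "resource limits must be declared": lambda w: w.has_resource_limits,
}


def violations(workload: Workload) -> list:
    """Return the names of all policies the workload violates."""
    return [rule for rule, check in POLICIES.items() if not check(workload)]


def decide(workload: Workload, enforce: bool = True) -> str:
    """Define what happens on violation: reject, or admit and raise an alert."""
    if not violations(workload):
        return "admit"
    return "reject" if enforce else "admit-with-alert"
```

Separating detection (`violations`) from reaction (`decide`) makes it possible to introduce a new policy in alert-only mode first and switch to enforcement once the existing workloads comply.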

Most of those aspects can be implemented automatically. OpenShift already does most of the job. To make it even more handy, OpenShift implements the so-called Security Context Constraints (SCCs), which provide typical policy profiles for different classes of containers.

For composing new applications it is normally useful to add certain standard functions such as OS features, runtimes, libraries or general services. OpenShift can utilize a large number of pre-validated, scanned, approved, certified and signed container images which also includes a universal base image for RHEL. Utilizing pre-validated container images not only speeds up application development, but also helps to keep the overall security level high.

Life Cycle and Runtime

As mentioned above, content policies should be part of a holistic security approach. Vulnerabilities in content as well as in system software play an increasingly important role in security architectures. More and more exploits are based on weaknesses caused by insecure development processes, be it that trojans get imported through an insecure supply chain, that proper coding rules are not in place, or that insecure libraries and services are used. Consequently, the focus of a security architecture needs to be expanded from just the operations environment to also include the design, development and test process. Security needs to be “shifted left” to cover development, test, deployment and operations over the full application life cycle.

OpenShift follows that concept with Advanced Cluster Security for Kubernetes (ACS). With ACS, it is possible to control both development and runtime processes via policies, static analysis, monitoring and vulnerability scanning.

Advanced Cluster Security for Kubernetes architecture (Source: Red Hat)

ACS is capable of controlling multiple clusters (independent of Red Hat Advanced Cluster Management). It uses policies to define the secure target state, to detect violations of that state, and to define what shall happen if a violation occurs. ACS already provides many standard policies which can be used as-is or tailored to individual security demands.

One of the most used features of ACS is to prevent container images that do not satisfy certain security policies from being deployed, for example images whose scan found vulnerabilities, images that have not been scanned for a certain time, or containers with writable root file systems. Many of those policies are key to achieving compliance with common security standards and frameworks. In order to track compliance, ACS provides out of the box a number of security profiles for several common security standards, such as CIS, HIPAA, NIST Special Publication 800-190 and 800-53, and PCI-DSS. Results can be browsed and examined in the ACS dashboard. ACS also helps to automatically identify and rate risks for the overall risk analysis.

ACS dashboard (Source: Red Hat)

For controlling the full life cycle, ACS integrates into

- image registries such as Quay,

- CI systems like Jenkins or CircleCI,

- issue trackers like Jira,

- cloud management solutions such as PagerDuty or Splunk,

- hyperscaler management layers like AWS,

- communication frameworks like Slack channels for alerting, as well as

- SIEM systems such as Sumo Logic.

Securing the development process with OpenShift (Source: Red Hat)

To sum it up, ACS uses the build and automation features of OpenShift to implement security with a scope that goes far beyond traditional security architectures, with the ease of establishing continuous compliance [4].

Beyond OpenShift

Although OpenShift already provides all necessary security features for baseline security as well as for increased security demands, there might still be the need to cope with IT infrastructure outside of the OpenShift automation scope.

Ansible can be triggered from OpenShift to handle such things in a secure and consistent manner, e.g. to configure security infrastructure, or to remediate outdated software packages or infrastructure vulnerabilities as part of a Security Orchestration, Automation and Response (SOAR) strategy.

Another point of concern can be APIs which get exposed by services which are run on the OpenShift platform. You might require access to those APIs to be registered and restricted, which can easily be done with 3scale API management.

Security Information and Event Management (SIEM) systems still play an important role in collecting and analyzing security-relevant events.

Conclusion

Cloud computing brings new aspects to designing IT security architectures. On one side, there are new challenges. Security has never been a static issue, but with cloud computing, a highly dynamic environment of shared resources needs to be secured. On the other side, new security concepts allow new levels of security to be achieved through layered security, security automation, AI/ML, DevSecOps and policy-based governance.

OpenShift not only provides a state-of-the-art platform for cloud computing and especially containers, but also a basis for establishing modern security concepts with full integration into existing and future security management environments.

Securing cloud computing means not just replicating common security patterns which once have been developed for client-server computing, but rather rethinking security with respect to new and powerful threats by leveraging powerful features implemented in platforms like OpenShift.

Links

[1] OpenShift Security Guide:

https://www.redhat.com/en/resources/openshift-security-guide-ebook

[2] Managing Security and Compliance for OpenShift Container Platform:

https://access.redhat.com/documentation/en-us/openshift_container_platform/4.10/pdf/security_and_compliance/openshift_container_platform-4.10-security_and_compliance-en-us.pdf

[3] OpenShift CIS Benchmark:

https://access.redhat.com/solutions/5518621

[4] Advanced Cluster Security for Kubernetes: