Introduction

If you are serious about running a microservice architecture at scale, you will run into challenges sooner or later. Besides all the benefits microservices deliver, they also add a layer of complexity and bring all the problems inherent in a distributed system.

As the number of services grows, it becomes increasingly difficult to understand the interactions between them. Finding and tracing errors or latencies becomes hard, and rolling out new services securely and reliably can be a struggle for dev and ops teams.

Red Hat OpenShift Service Mesh addresses a variety of challenges in a microservice architecture by creating a centralized point of control in an application. It adds a transparent layer to existing distributed applications without requiring any changes to the application’s code.

OpenShift Service Mesh is based on the open source Maistra project, an opinionated distribution of Istio designed to work with OpenShift. It combines Kiali, Jaeger, and Prometheus into a platform managed according to the OperatorHub lifecycle.

OpenShift Service Mesh can do a lot of things. The number of features and capabilities can be a bit overwhelming, but at its core it provides four main things:

- Traffic management

- Telemetry and Observability

- Service Identity and Security

- Policy enforcement.

Service Mesh Federation

OpenShift Service Mesh 2.1 introduced a new feature “Federation”, which is a deployment model that lets you share services and workloads across separate meshes managed in distinct administrative domains.

The OpenShift ServiceMesh Federation feature is different from upstream Istio. One of these differences is its multi-tenant implementation, where a cluster may be divided into multiple “tenant” service meshes. Each tenant is a distinct administrative domain and can be in the same or different clusters. Upstream Istio assumes that one cluster is one tenant and that the communication relies on direct connectivity between the Kubernetes API servers.

Federation enables use cases like:

- Sharing services in a secure way across different meshes and providers

- Advanced Deployment strategies

- Cross-region High Availability

- Supporting Migration scenarios

Example Use Case: Testing with production data

Here is what we want to set up: We are going to create a production service mesh, running our production workload (the world famous Istio bookinfo application).

Then we will set up a stage mesh tenant within the same OpenShift Cluster, where we will deploy and test the new version of the bookinfo’s details microservice. We will also want to test the functional behavior as well as the performance with data from production. In order to achieve that, we will first federate the two meshes, export the new version of the details-service from the stage environment, import it in the production mesh and create a VirtualService mirroring all traffic to the new version in the stage environment.

You can find all the sources and a deploy script in this GitHub repository: https://github.com/ortwinschneider/mesh-federation

Let’s get started!

OpenShift ServiceMesh Installation

We need at least one OpenShift cluster where we install OpenShift ServiceMesh 2.1+. Basically we have to install 4 operators in the following order:

- OpenShift Elasticsearch (Optional)

- Jaeger

- Kiali

- Red Hat OpenShift Service Mesh

You’ll find the complete installation instructions in “Installing the Operators” in the Service Mesh 2.x documentation for OpenShift Container Platform 4.9.

Installing the production Service Mesh

First we log in to OpenShift and create new projects for the production mesh control plane and the bookinfo application.

oc new-project prod-mesh
oc new-project prod-bookinfo

The interaction between meshes is handled through ingress and egress gateways, which are the only identities transferred between meshes. You need an additional ingress and egress gateway pair for each mesh you are federating with. The gateways in each mesh must be configured to communicate with each other, whether they are in the same or in different clusters.

- All outbound requests to a federated mesh are transferred through a local egress gateway

- All inbound requests from a federated mesh are transferred through a local ingress gateway

Therefore we have configured additional ingress and egress gateways in the ServiceMeshControlPlane custom resource.

apiVersion: maistra.io/v2
kind: ServiceMeshControlPlane
metadata:
  namespace: prod-mesh
  name: prod-mesh
spec:
  cluster:
    name: production-cluster
  addons:
    grafana:
      enabled: true
    jaeger:
      install:
        storage:
          type: Memory
    kiali:
      enabled: true
    prometheus:
      enabled: true
  policy:
    type: Istiod
  telemetry:
    type: Istiod
  tracing:
    sampling: 10000
    type: Jaeger
  version: v2.1
  runtime:
    defaults:
      container:
        imagePullPolicy: Always
  proxy:
    accessLogging:
      file:
        name: /dev/stdout
  gateways:
    additionalEgress:
      stage-mesh-egress:
        enabled: true
        requestedNetworkView:
        - network-stage-mesh
        routerMode: sni-dnat
        service:
          metadata:
            labels:
              federation.maistra.io/egress-for: stage-mesh
          ports:
          - port: 15443
            name: tls
          - port: 8188
            name: http-discovery #note HTTP here
    additionalIngress:
      stage-mesh-ingress:
        enabled: true
        routerMode: sni-dnat
        service:
          type: ClusterIP
          metadata:
            labels:
              federation.maistra.io/ingress-for: stage-mesh
          ports:
          - port: 15443
            name: tls
          - port: 8188
            name: https-discovery #note HTTPS here
  security:
    trust:
      domain: prod-mesh.local

Some things to note here:

In the ServiceMeshControlPlane resource we need to configure the trust domain, because the other mesh references it to allow incoming requests. We also want Kiali to show endpoints associated with the other mesh network, so the requestedNetworkView field must be set to network-<ServiceMeshPeer name>. The routerMode should always be sni-dnat, and the port names must be tls and http-discovery (on the egress gateway) or https-discovery (on the ingress gateway). The same configuration must be applied symmetrically on the stage-mesh control plane.
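
These naming rules can be captured in a small helper. The following is only a sketch and not part of the original setup: the function name federation_names is hypothetical, and the <peer>-egress and <peer>-ingress gateway names simply follow the convention used in this article, not a requirement of the operator.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the federation-related names used in a
# ServiceMeshControlPlane for a given remote peer (the ServiceMeshPeer name).
federation_names() {
  local peer="$1"
  if [ -z "$peer" ]; then
    echo "usage: federation_names <ServiceMeshPeer name>" >&2
    return 1
  fi
  # requestedNetworkView must be network-<ServiceMeshPeer name>
  echo "network_view=network-${peer}"
  # Gateway names follow this article's convention (an assumption, not a rule)
  echo "egress_gateway=${peer}-egress"
  echo "ingress_gateway=${peer}-ingress"
  # Labels that tie each gateway service to the peer it serves
  echo "egress_label=federation.maistra.io/egress-for=${peer}"
  echo "ingress_label=federation.maistra.io/ingress-for=${peer}"
}

federation_names stage-mesh
```

Running it with prod-mesh instead gives the values needed on the stage-mesh side, which makes the symmetry of the two control planes easy to check.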

Now we make the prod-bookinfo project a member of the mesh by using a ServiceMeshMemberRoll resource.

apiVersion: maistra.io/v1
kind: ServiceMeshMemberRoll
metadata:
  name: default
  namespace: prod-mesh
spec:
  members:
  - prod-bookinfo

Let’s apply both resources and optionally wait for the installation to complete. This sets up the complete control plane with Jaeger, Kiali, Prometheus, Istiod, ingress and egress gateways, etc.

oc apply -f prod-mesh/smcp.yaml
oc apply -f prod-mesh/smmr.yaml
oc wait --for condition=Ready -n prod-mesh smmr/default --timeout 300s

We are ready to deploy our “production” workload, the bookinfo application with Gateway, VirtualService and DestinationRule definitions.

oc apply -n prod-bookinfo -f https://raw.githubusercontent.com/Maistra/istio/maistra-2.0/samples/bookinfo/platform/kube/bookinfo.yaml
oc apply -n prod-bookinfo -f https://raw.githubusercontent.com/Maistra/istio/maistra-2.0/samples/bookinfo/networking/bookinfo-gateway.yaml
oc apply -n prod-bookinfo -f https://raw.githubusercontent.com/Maistra/istio/maistra-2.0/samples/bookinfo/networking/destination-rule-all.yaml

We can check whether all pods are running with the sidecar injected. You should see 2/2 in the READY column.

oc get pods
NAME                             READY   STATUS    RESTARTS   AGE
details-v1-86dfdc4b95-fcmkq      2/2     Running   0          2d20h
productpage-v1-658849bb5-pdpnq   2/2     Running   0          2d20h
ratings-v1-76b8c9cbf9-z98v6      2/2     Running   0          2d20h
reviews-v1-58b8568645-dks9d      2/2     Running   0          2d21h
reviews-v2-5d8f8b6775-cb8df      2/2     Running   0          2d20h
reviews-v3-666b89cfdf-z9r5d      2/2     Running   0          2d20h

Installing the staging Service Mesh

After installing the production control plane and workload, it’s time to set up the stage environment. We follow the same steps as for the production mesh. First we create the projects:

oc new-project stage-mesh
oc new-project stage-bookinfo

Next we install the stage-mesh control plane in the stage-mesh project and add the stage-bookinfo project to the mesh:

apiVersion: maistra.io/v2
kind: ServiceMeshControlPlane
metadata:
  namespace: stage-mesh
  name: stage-mesh
spec:
  cluster:
    name: stage-cluster
  addons:
    grafana:
      enabled: true
    jaeger:
      install:
        storage:
          type: Memory
    kiali:
      enabled: true
    prometheus:
      enabled: true
  policy:
    type: Istiod
  telemetry:
    type: Istiod
  tracing:
    sampling: 10000
    type: Jaeger
  version: v2.1
  proxy:
    accessLogging:
      file:
        name: /dev/stdout
  runtime:
    defaults:
      container:
        imagePullPolicy: Always
  gateways:
    additionalEgress:
      prod-mesh-egress:
        enabled: true
        requestedNetworkView:
        - network-prod-mesh
        routerMode: sni-dnat
        service:
          metadata:
            labels:
              federation.maistra.io/egress-for: prod-mesh
          ports:
          - port: 15443
            name: tls
          - port: 8188
            name: http-discovery #note HTTP here
    additionalIngress:
      prod-mesh-ingress:
        enabled: true
        routerMode: sni-dnat
        service:
          type: ClusterIP
          metadata:
            labels:
              federation.maistra.io/ingress-for: prod-mesh
          ports:
          - port: 15443
            name: tls
          - port: 8188
            name: https-discovery #note HTTPS here
  security:
    trust:
      domain: stage-mesh.local

apiVersion: maistra.io/v1
kind: ServiceMeshMemberRoll
metadata:
  name: default
  namespace: stage-mesh
spec:
  members:
  - stage-bookinfo

Let’s apply both resources and optionally wait for the installation to complete.

oc apply -f stage-mesh/smcp.yaml
oc apply -f stage-mesh/smmr.yaml
oc wait --for condition=Ready -n stage-mesh smmr/default --timeout 300s

Now in the stage environment we are going to deploy only the “new” version of the details-service, not the complete bookinfo application. This is the version we want to test with data from production.

oc apply -n stage-bookinfo -f stage-mesh/stage-detail-v2-deployment.yaml
oc apply -n stage-bookinfo -f stage-mesh/stage-detail-v2-service.yaml

Service Mesh Peering

We now have two distinct Service Meshes running in one OpenShift cluster, one for production and the other for staging. The next step is to federate these Service Meshes in order to share services. To validate certificates presented by the other mesh, we need the CA root certificate of the remote mesh. The default certificate of the other mesh is located in a ConfigMap called istio-ca-root-cert in the remote mesh’s control plane namespace.

We create a new ConfigMap containing the certificate of the other mesh. The key must still be root-cert.pem. This configuration has to be done symmetrically on both meshes.
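
Because a PEM certificate spans several lines, one way to build such a ConfigMap is to render the manifest from a certificate file. This is a minimal sketch under stated assumptions: the function render_ca_configmap and the file name stage-mesh-root-cert.pem are hypothetical, and this is an alternative to, not a description of, the templates in the repository.

```bash
#!/usr/bin/env bash
# Hypothetical helper: render a ConfigMap manifest that stores a peer's CA
# root certificate under the required key root-cert.pem.
#   $1 ConfigMap name, $2 namespace, $3 path to the PEM file
render_ca_configmap() {
  local name="$1" namespace="$2" pem_file="$3"
  if [ ! -r "$pem_file" ]; then
    echo "cannot read certificate file: $pem_file" >&2
    return 1
  fi
  # Indent every PEM line by four spaces so it fits the YAML block scalar.
  cat <<EOF
apiVersion: v1
kind: ConfigMap
metadata:
  name: ${name}
  namespace: ${namespace}
data:
  root-cert.pem: |
$(sed 's/^/    /' "$pem_file")
EOF
}

# Possible usage against a cluster (not executed here):
#   oc get configmap istio-ca-root-cert -n stage-mesh \
#     -o jsonpath='{.data.root-cert\.pem}' > stage-mesh-root-cert.pem
#   render_ca_configmap stage-mesh-ca-root-cert prod-mesh stage-mesh-root-cert.pem \
#     | oc apply -f -
```

The repository's deploy script takes a template-based route, shown next.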

PROD_MESH_CERT=$(oc get configmap -n prod-mesh istio-ca-root-cert -o jsonpath='{.data.root-cert\.pem}')
STAGE_MESH_CERT=$(oc get configmap -n stage-mesh istio-ca-root-cert -o jsonpath='{.data.root-cert\.pem}')
sed "s:{{STAGE_MESH_CERT}}:$STAGE_MESH_CERT:g" prod-mesh/stage-mesh-ca-root-cert.yaml | oc apply -f -
sed "s:{{PROD_MESH_CERT}}:$PROD_MESH_CERT:g" stage-mesh/prod-mesh-ca-root-cert.yaml | oc apply -f -

The actual federation is done with a ServiceMeshPeer custom resource. We need a peering configuration on both the prod-mesh and the stage-mesh side.

kind: ServiceMeshPeer
apiVersion: federation.maistra.io/v1
metadata:
  name: stage-mesh
  namespace: prod-mesh
spec:
  remote:
    addresses:
    - prod-mesh-ingress.stage-mesh.svc.cluster.local
    discoveryPort: 8188
    servicePort: 15443
  gateways:
    ingress:
      name: stage-mesh-ingress
    egress:
      name: stage-mesh-egress
  security:
    trustDomain: stage-mesh.local
    clientID: stage-mesh.local/ns/stage-mesh/sa/prod-mesh-egress-service-account
    certificateChain:
      kind: ConfigMap
      name: stage-mesh-ca-root-cert

In spec.remote we configure the address of the peer’s ingress gateway and, optionally, the ports handling discovery and service requests. This address has to be reachable from the local cluster. We also reference the names of our local ingress and egress gateways handling the federation traffic. The spec.security section configures the remote trust domain, the client ID of the remote egress gateway and the CA root certificate. It is also important to name the ServiceMeshPeer resource after the remote mesh!
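
The clientID and the remote address follow a pattern that can be read off the example above. As a rough sketch, the two hypothetical helpers below (peer_client_id and peer_address are names invented for illustration) derive both values; the address form assumes both meshes run in the same cluster, as in this article.

```bash
#!/usr/bin/env bash
# Hypothetical helpers that derive ServiceMeshPeer values from a few inputs.

# clientID of the remote egress gateway, in the form
#   <remote trust domain>/ns/<remote control plane namespace>/sa/<remote egress gateway>-service-account
peer_client_id() {
  local trust_domain="$1" namespace="$2" egress_gateway="$3"
  echo "${trust_domain}/ns/${namespace}/sa/${egress_gateway}-service-account"
}

# In-cluster address of the remote ingress gateway service
# (assumes the peer mesh lives in the same cluster).
peer_address() {
  local ingress_gateway="$1" namespace="$2"
  echo "${ingress_gateway}.${namespace}.svc.cluster.local"
}

# Values for the ServiceMeshPeer "stage-mesh" created in prod-mesh:
peer_client_id stage-mesh.local stage-mesh prod-mesh-egress
peer_address prod-mesh-ingress stage-mesh
```

Swapping the arguments (prod-mesh.local, prod-mesh, stage-mesh-egress and stage-mesh-ingress) yields the values for the mirrored ServiceMeshPeer on the stage-mesh side.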

Let’s apply the ServiceMeshPeer resources for the stage and prod environment.

oc apply -f prod-mesh/smp.yaml
oc apply -f stage-mesh/smp.yaml

Once the ServiceMeshPeer resources are configured on both sides, the connections between the gateways will be enabled. We have to make sure that the connections are established by checking the status:

oc -n prod-mesh get servicemeshpeer stage-mesh -o json | jq .status
oc -n stage-mesh get servicemeshpeer prod-mesh -o json | jq .status

{
  "discoveryStatus": {
    "active": [
      {
        "pod": "istiod-prod-mesh-7b59b74c-xfg88",
        "remotes": [
          {
            "connected": true,
            "lastConnected": "2022-01-07T13:50:12Z",
            "lastFullSync": "2022-01-10T14:30:11Z",
            "source": "10.129.0.97"
          }
        ],
        "watch": {
          "connected": true,
          "lastConnected": "2022-01-07T13:50:27Z",
          "lastDisconnect": "2022-01-07T13:48:36Z",
          "lastDisconnectStatus": "503 Service Unavailable",
          "lastFullSync": "2022-01-08T11:27:41Z"
        }
      }
    ]
  }
}

The status value for inbound (remotes[0].connected) and outbound (watch.connected) connections must be true. It may take a moment as the full synchronization happens every 5 minutes. If you don’t see a successful connection status for a while, check the logs of the istiod pod. You can ignore the warning in the istiod logs for “remote trust domain not matching the current trust domain…”.
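
This check can be scripted instead of read by eye. The following is a minimal sketch, assuming jq is installed (it is already used above); the function name peer_status_connected is hypothetical, and the field names are taken from the status output shown in this article.

```bash
#!/usr/bin/env bash
# Hypothetical helper: read a ServiceMeshPeer .status JSON document on stdin.
# Succeeds only if at least one istiod pod is listed and every listed pod
# reports watch.connected and all of its remotes[].connected as true.
peer_status_connected() {
  jq -e '
    (.discoveryStatus.active // []) as $pods
    | ($pods | length) > 0
      and all($pods[];
              .watch.connected == true
              and ((.remotes // []) | length > 0 and all(.[]; .connected == true)))
  ' > /dev/null
}

# Possible polling loop against a cluster (not executed here):
#   until oc -n prod-mesh get servicemeshpeer stage-mesh -o json \
#         | jq .status | peer_status_connected; do
#     echo "waiting for federation connection..."
#     sleep 10
#   done
```

The same loop with the namespace and peer name swapped covers the stage-mesh side.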

Exporting Services

Now that the meshes are connected we can export services with an ExportedServiceSet resource. Exporting a service configures the ingress gateway to accept inbound requests for the exported service. Inbound requests are only validated for the client ID configured for the remote egress-gateway.

We must declare every service we want to make available to other meshes. It is possible to select services by name or namespace and we can also use wildcards. Important: We can only export services which are visible to the mesh’s namespace.

In our case we want to export the new version of the details-service from the stage-bookinfo project.

kind: ExportedServiceSet
apiVersion: federation.maistra.io/v1
metadata:
  name: prod-mesh
  namespace: stage-mesh
spec:
  exportRules:
  - type: LabelSelector
    labelSelector:
      namespace: stage-bookinfo
      selector:
        matchLabels:
          app: details
      alias:
        namespace: bookinfo

Important: The name of the ExportedServiceSet must match the ServiceMeshPeer name!

Internally, a unique name is used to identify the service for the other mesh, e.g. details.stage-bookinfo.svc.cluster.local may be exported under the name details.stage-bookinfo.svc.prod-mesh-exports.local. Exported services will only see traffic from the ingress gateway, not the original requestor.
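
The example name above suggests a simple pattern. The sketch below encodes that pattern in a hypothetical function, exported_name; it is inferred from the names shown in this article only, so treat the status output of the ExportedServiceSet as the source of truth.

```bash
#!/usr/bin/env bash
# Hypothetical helper: name under which a service appears to be exported,
# following the pattern <service>.<namespace>.svc.<peer>-exports.local
# seen in this article's examples.
#   $1 service name, $2 namespace, $3 ServiceMeshPeer name
exported_name() {
  local service="$1" namespace="$2" peer="$3"
  echo "${service}.${namespace}.svc.${peer}-exports.local"
}

exported_name details stage-bookinfo prod-mesh
```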

Let’s apply the ExportedServiceSet:

oc apply -f stage-mesh/ess.yaml

Then we check the status of the exported services (this may also take a moment to get updated):

oc get exportedserviceset prod-mesh -n stage-mesh -o json | jq .status

{
  "exportedServices": [
    {
      "exportedName": "details.stage-bookinfo.svc.prod-mesh-exports.local",
      "localService": {
        "hostname": "details.stage-bookinfo.svc.cluster.local",
        "name": "details",
        "namespace": "stage-bookinfo"
      }
    }
  ]
}

Importing Services

Importing services is very similar to exporting services and lets you explicitly specify which services exported from another mesh should be accessible within your service mesh.

The ImportedServiceSet custom resource is used to select services for import. Importing a service configures the mesh to send requests for the service to the egress gateway specified in the ServiceMeshPeer resource.

We are going to import the previously exported details-service from the stage-mesh in order to mirror traffic to the service:

apiVersion: federation.maistra.io/v1
kind: ImportedServiceSet
metadata:
  name: stage-mesh
  namespace: prod-mesh
spec:
  importRules:
  - importAsLocal: false
    nameSelector:
      alias:
        name: details-v2
        namespace: prod-bookinfo
      name: details
      namespace: stage-bookinfo
    type: NameSelector

As you can see, we are importing the service with an alias name and namespace.

oc apply -f prod-mesh/iss.yaml

And again we check the status:

oc -n prod-mesh get importedservicesets stage-mesh -o json | jq .status

{
  "importedServices": [
    {
      "exportedName": "details.stage-bookinfo.svc.prod-mesh-exports.local",
      "localService": {
        "hostname": "details-v2.prod-bookinfo.svc.stage-mesh-imports.local",
        "name": "details-v2",
        "namespace": "prod-bookinfo"
      }
    }
  ]
}

If you import services with importAsLocal: true, the domain suffix will be svc.cluster.local, like your normal local services. So, if you already have a local service with this name, the remote endpoint will be added to this service’s endpoints and you load balance across your local and imported services.
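
The effect of importAsLocal on the resulting hostname can be summarized in a few lines. This is a sketch only: the function imported_hostname is hypothetical, and the -imports.local pattern is taken from the status output above.

```bash
#!/usr/bin/env bash
# Hypothetical helper: hostname under which an imported service is reachable.
#   $1 service name (alias, if one is set), $2 namespace (alias, if one is set),
#   $3 ServiceMeshPeer name, $4 value of importAsLocal (true|false, default false)
imported_hostname() {
  local service="$1" namespace="$2" peer="$3" import_as_local="${4:-false}"
  if [ "$import_as_local" = "true" ]; then
    # Imported as local: same suffix as ordinary local services
    echo "${service}.${namespace}.svc.cluster.local"
  else
    # Default: peer-specific imports domain
    echo "${service}.${namespace}.svc.${peer}-imports.local"
  fi
}

imported_hostname details-v2 prod-bookinfo stage-mesh false
imported_hostname details prod-bookinfo stage-mesh true
```

The first call reproduces the hostname from the status output above; the second shows the name that would collide with, and therefore merge into, an existing local details service.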

Routing traffic to imported services

We are almost done. So far we have set up two Service Meshes with distinct administrative domains. We’ve also deployed our workload and included it in the mesh. Then we have federated those two meshes with a peering configuration and have exported and imported the new version of the details-service.

We can treat our imported service like any other service and, for example, route traffic to it by applying VirtualServices, DestinationRules, etc.

What we initially wanted to achieve is mirroring the traffic going to the production details-service to the new version of the details-service running in the stage environment, in order to test this new version with real data from production.

This can be done by simply applying a VirtualService for the details-service:

kind: VirtualService
apiVersion: networking.istio.io/v1alpha3
metadata:
  name: details
  namespace: prod-bookinfo
spec:
  hosts:
  - details.prod-bookinfo.svc.cluster.local
  http:
  - mirror:
      host: details-v2.prod-bookinfo.svc.stage-mesh-imports.local
    route:
    - destination:
        host: details.prod-bookinfo.svc.cluster.local
        subset: v1
      weight: 100

oc apply -n prod-bookinfo -f prod-mesh/vs-mirror-details.yaml

Instead of mirroring, we could also split traffic and perform a canary rollout across the two meshes, sending e.g. 20% of traffic to the stage environment. With this approach we could also gradually migrate workloads from one cluster to another.

kind: VirtualService
apiVersion: networking.istio.io/v1alpha3
metadata:
  name: details
  namespace: prod-bookinfo
spec:
  hosts:
  - details.prod-bookinfo.svc.cluster.local
  http:
  - route:
    - destination:
        host: details.prod-bookinfo.svc.cluster.local
        subset: v1
      weight: 80
    - destination:
        host: details-v2.prod-bookinfo.svc.stage-mesh-imports.local
      weight: 20

oc apply -f prod-mesh/vs-split-details.yaml

Now, to see federation in action, we need to create some load in the bookinfo app in prod-mesh.

BOOKINFO_URL=$(oc -n prod-mesh get route istio-ingressgateway -o json | jq -r .spec.host)

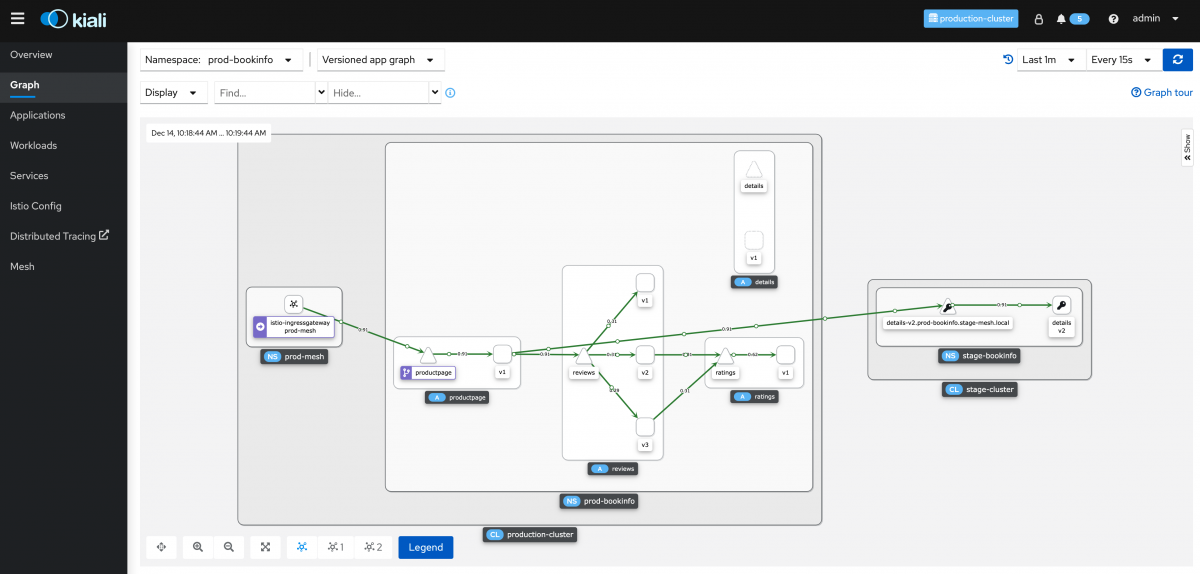

while true; do sleep 1; curl http://$BOOKINFO_URL/productpage &> /dev/null; doneCode language: Bash (bash)The best way to visualize the traffic flowing through the mesh is by using Kiali. Kiali is a management and visualization dashboard, rendering traffic from and to services. The visualization is based on metrics pulled from the local mesh.

Open the Kiali UI for the prod-mesh and stage-mesh environments and take a look at the Graph page. You can adjust the graph, showing request percentages, response times, animate traffic etc.

oc get route -n prod-mesh kiali -o jsonpath='{.spec.host}'

Conclusion

With OpenShift Service Mesh federation, it is possible to set up several service meshes in one or more OpenShift clusters. It is a “zero trust” approach with distinct administrative domains, where information is shared only through explicit configuration, which provides the necessary security.

OpenShift Service Mesh federation enables some exciting new use cases by extending the overall capabilities and leveraging the powerful underlying hybrid cloud container platform.

You don’t have an OpenShift environment? Get started with the Developer Sandbox for Red Hat OpenShift.